Exvision hopes to bring your favorite mobile games to your TV

Exvision uses high-speed sensor technology developed at the University of Tokyo to translate your gestures into control of mobile games on your television. Company executives hope to bring the same system, which the firm launched earlier this year, to appliances and even smartwatches within the next few years.

The company is the 15-person commercial spinoff of the Ishikawa-Watanabe Lab at the University of Tokyo, which has been focusing on this particular type of sensory technology for 20 years. In one example, the lab used those systems to create a robotic hand that never loses at rock-paper-scissors.

Exvision plans to release a small consumer-market camera peripheral by the end of this year. The company is working with smart TV manufacturers, as well as makers of smartphones, set-top boxes, and other peripherals, to build the sensors right into their products. Compared to some other types of sensor systems, the components are fairly inexpensive, though they don’t do true three-dimensional movement recognition (as existing systems like the Nintendo Wii or Xbox’s Kinect do).

The company plans to set up a subsidiary in Silicon Valley this year, mostly for marketing and user support. I got a chance to see the system at its debut at the South by Southwest festival earlier this spring in Austin, Texas. Recently I caught up with Sakuya “Zak” Morimoto, the chief operating officer of Exvision, to talk about what’s on the horizon.

GamesBeat: Can you describe the research at University of Tokyo that led to Exvision?

Above: Sakuya “Zak” Morimoto.

Image Credit: Exvision

Sakuya “Zak” Morimoto: Exvision is a spin-out venture of the University of Tokyo’s Ishikawa-Watanabe Lab, and Professor [Masatoshi] Ishikawa is a co-founder and [chief technology officer] of Exvision. A few former researchers from the lab also work for Exvision as employees and technical advisors.

For more than two decades, the lab has dedicated itself to research on Hi-Speed Vision technology, which takes advantage of images captured at frame rates higher than the conventional video rate of 30-60 [frames per second]. It may be counterintuitive, but a higher frame rate reduces the burden of image processing and significantly improves accuracy and latency, because moving objects travel a much shorter distance between frames than [with a] lower frame rate.
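To make the frame-rate argument concrete, here is a simplified back-of-the-envelope sketch. It is not Exvision’s algorithm: it assumes a hand moving at a constant, hypothetical 3,000 pixels per second and a tracker that searches a square window around the hand’s last known position, just large enough to cover the farthest it could have moved since the previous frame. The helper names are illustrative only.

```python
# Simplified model (an assumption, not EGS's actual method): a tracker searches a
# square window around the last known position, sized to the per-frame motion.

def displacement_per_frame(speed_px_per_s: float, fps: float) -> float:
    """Pixels an object at constant speed travels between two consecutive frames."""
    return speed_px_per_s / fps


def search_window_area(displacement_px: float) -> float:
    """Area of a square window extending displacement_px in every direction."""
    side = 2 * displacement_px + 1
    return side * side


HAND_SPEED_PX_PER_S = 3000.0  # hypothetical fast hand motion across the image

for fps in (30, 60, 1000):
    d = displacement_per_frame(HAND_SPEED_PX_PER_S, fps)
    area = search_window_area(d)
    print(f"{fps:>5} fps: {d:6.1f} px/frame, search area {area:8.0f} px^2")
```

Under these assumptions the hand moves 100 pixels per frame at 30 fps but only 3 pixels per frame at 1,000 fps, so the search area shrinks from 40,401 to 49 square pixels. Even summed over a full second (30 × 40,401 versus 1,000 × 49), the high-frame-rate tracker examines far fewer pixels, which is the intuition behind the claim above. As Morimoto notes, a real system also depends on many algorithmic and implementation techniques beyond raw frame rate.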

However, you can’t just increase the frame rate [on other systems] and achieve the same performance as EGS, because EGS relies on many ideas and techniques in its algorithms and implementation methods.

The lab develops the concept and base technology, and Exvision turns them into real solutions and implements them as products. EGS (Exvision Gesture System) is the first product of this process. The implementation process is as critical as the concept-building process, because novel ideas born in a research lab usually work only in research environments.

GamesBeat: What sets this apart from other types of gesture control — Kinect, for example? Does the lack of depth measurement mean that as an interface, it will primarily be limited to 2D games?

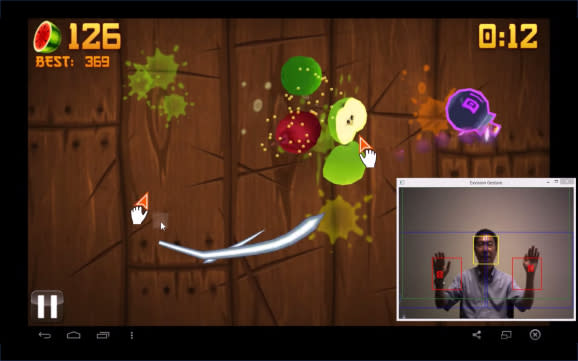

Morimoto: EGS’s business model is to let players gesture-play existing mobile games on a large-screen TV, instead of creating a whole new gesture game ecosystem. An important point is that EGS is probably the only gesture UI that is responsive, accurate, and smooth enough to replicate [the] multi-touch UI of tablets and smartphones. With EGS, players can enjoy gesture-playing existing mobile games without being frustrated by latency or false recognition.

You don’t need depth information to gesture-play mobile games, because those games are designed to be played with a multi-touch UI on a touch screen. None of those games use depth information for control. Thus, we do not see the lack of depth information as a limitation of EGS; instead, it is an advantage.

Above: Exvision in action.

Image Credit: Exvision

GamesBeat: Are you currently working with any peripherals/television manufacturers/distributors for commercial production of the Exvision system? If so, what is your expected timeline?

Morimoto: We are implementing EGS in a small camera form factor and planning to release it as an independent peripheral product for consumers by the end of this year. We already have manufacturing companies lined up to produce the product, but we will distribute it mainly online. Meanwhile, we are actively talking to manufacturers of peripherals, TVs, AIO-PCs, tablets, smartphones, and set-top boxes about various types of business engagements.

GamesBeat: How does the manufacturing price point of the gesture system compare to others on the market? What do you expect the price at the consumer end point to be?

Morimoto: We can’t talk about costs for OEM customers, but we plan to introduce the peripheral product at $60-$80 for consumers.

GamesBeat: Who is currently funding the system/Exvision’s work in this area?

Morimoto: Some angel investors and institutional investors in Japan have supported the establishment of Exvision. Institutional investors include Industrial Growth Platform Inc. (IGPI), Innovation Network Corporation of Japan (INCJ), and Mizuho Capital.

GamesBeat: What games do you expect people to use the system with? Will it be compatible with all games, as a true input device, or just those specifically designed for it?

Morimoto: Because EGS works as a standard peripheral, like a mouse, it can theoretically control most games developed for multi-touch UI. However, it is also true that some games are more suitable for gesture UI than others. For example, we found that many casual games that use quick and simple motions, such as Fruit Ninja, Candy Crush, Cut the Rope, and Smash Hit, are very suitable and more fun to gesture-play on a TV than to touch-play on mobile devices. But as players get used to the gesture UI, they will be able to play more complicated games.

Above: Candy Crush on the big screen.

Image Credit: Exvision

GamesBeat: Would you see applications of the product for other types of games than just on the TV? I’m thinking here of virtual reality, because as the Oculus executives are fond of noting, input is the big issue with VR games — and people really want to use their hands.

Morimoto: Actually, we consider [head-mounted displays] as important a platform for EGS as TV, and we are actively talking to HMD companies. Because our solution delivers a high-quality gesture UI with only a CMOS sensor and software, it is a very effective solution in terms of cost, power consumption, and size.

The range of platforms [in which] EGS can be embedded began with smart TVs and then expanded to HMDs. As the solution gets smaller and cheaper year by year, it will eventually be embedded into smartwatches, as well as home appliances such as lighting systems, air conditioners, and refrigerators, within a few years. Our aspiration is to enable the concept of the “Gesture Of Things.”