Apple's 'Vision Pro' Headset Wants to Be the $3,499 Anti-Metaverse

Apple’s Vision Pro headset displays users’ eyes in an effort to make wearing a headset seem less isolating.

Apple’s new Vision Pro headset is finally here, announced at WWDC 2023 on Monday, and it’s both more and less compelling than the initial rumors had us believing it would be. While Apple claims to have packed some of the most advanced augmented reality technology into its $3,499 wearable device, the Vision Pro’s gesture-based controls and focus on well-worn Mac apps mean its appeal will depend largely on a “coolness” factor rather than any real-world use case.

Despite the hype among the VR development community, Apple isn’t necessarily trying to compete with the likes of Meta in its quest to create a new digital realm, AKA the “Metaverse.” Unlike the Meta Quest headset, Apple’s upcoming device has no controller and instead uses a combination of hand gestures, eye tracking, and voice controls to select and control apps. Though the Vision Pro combines both VR and AR capabilities into what Apple calls “mixed reality,” most of the press materials stick to showing apps in the augmented reality setting, with users’ surroundings still visible, as opposed to full-screen virtual reality.


You can tell what Apple wants from its device and its expected user base by what the company showed during its presentation. Most of the demos featured people in a living room or office setting surrounded by displays. One user was looking up hotel bookings while listening to music. Another talked with coworkers while going over boring product slides.

The company did share a fair bit about how users could watch movies on the device, including a partnership with Disney for more “3D experiences” and more “immersive video” that can include spatial video akin to the holographic movies in 2002’s Minority Report. Movie watching in a headset can be a fun experience, but it’s much more fun to watch movies with friends and family than alone in a room.

Other people who get close enough to headset users will appear in the UI, even if users have blocked out the world around them. Apple wants users to feel present in the world around them by showing their eyes, but by their nature, headsets are an isolating experience.

In effect, Apple has created an incredibly sophisticated, souped-up, ultra-expensive MacBook for your face. Display analyst Ross Young wrote on Monday that the displays themselves make up 10% of its price. Even if the headset is as capable as Apple claims it will be, so much of what’s on offer feels geared only toward enthusiasts. Apple is already positioning it as a luxury product. According to supply chain analyst Ming-Chi Kuo, the device’s shipments in 2023 are likely to be lower than the industry might expect.

While the device aims to stand out from the crowd in how it is meant to be used, the headset still runs into the same problems people have had with VR goggles for years. Apple claims the device is extremely lightweight compared to other VR goggles on the market, and that could be the case. The battery, often the heaviest part of a headset, is housed in an external pack meant to sit in users’ pockets, attached via a wire.

We’ve collected some of the most interesting aspects of Apple’s big reveal, showcasing just how strange Apple’s entry into the AR market truly is.

Are Those Truly My Eyes?

The Vision Pro uses its internal LED sensors to map users’ eyes and display them for anybody passing by. The external screen also subtly changes the view depending on what app the Vision Pro user is in the middle of using.

Vision Pro Will Scan Users’ Heads Then Show Their Faces in FaceTime Calls

When initially signing in, users will be prompted to scan their face. Those inside the mixed reality of the Vision Pro will see other people in FaceTime calls in little boxes they can position around the room, and they should hear voices coming from wherever those boxes are positioned. Other people in the call won’t see the headset-wearing user, but instead a “persona” created from Apple’s face scan and animated using machine learning.

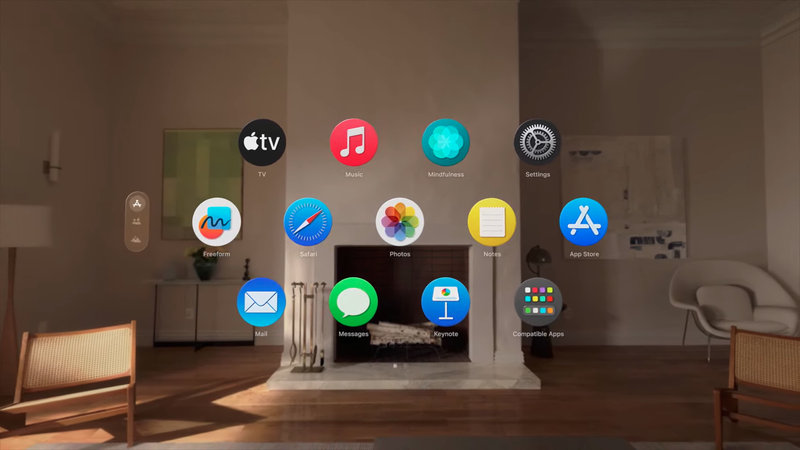

Where Else Have We Seen These Apps?

Apple is packing the Vision Pro to the gills with well-worn apps one might recognize from a host of other Apple products. These apps can all run together in a customizable UI that fills the user’s physical environment.

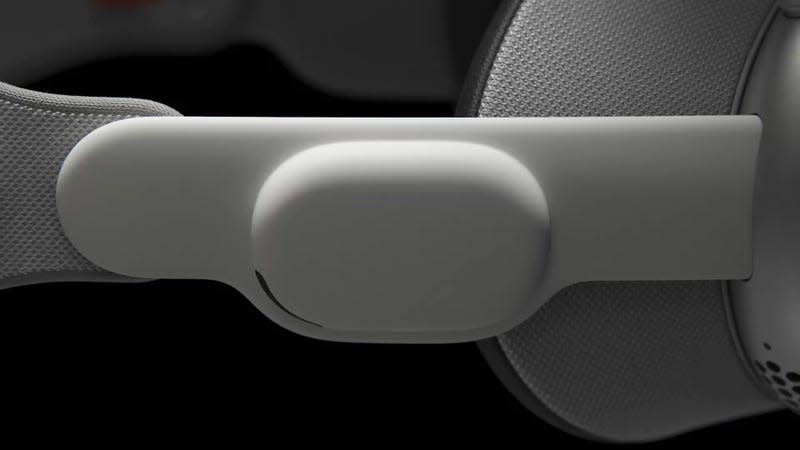

Dial Into a New Reality

This dial sitting on top of the headset controls whether a user is interacting in a VR or AR space. Apple hasn’t revealed which pre-set environments will be available to replace your boring office setting, but users should be able to manipulate how much VR they get.

Apple Wants You to Relive Precious Moments ‘Spatially’

With the near-dozen sensors positioned on the front of the device (not to mention the LED and other sensors facing the user), Apple made a big deal of the whole “spatial” aspect of its “spatial computing” moniker. One of those features was its ability to show video and photos along with spatial audio.

Plus: Even More 3D Movies and Disney Tie-Ins

We’re only a decade or so past the time when 3D movies were the most-hyped aspect of modern Hollywood. Apple wants to bring those times back with its talk of 3D movies and other experiences. Disney+ will be available on Vision Pro from day one, and it will come with specific experiences like watching Star Wars: The Mandalorian with its own tie-in UI. Disney CEO Bob Iger also promised users will be able to engage with ESPN+ content beyond the game with multi-screen views and even digitized replays.

Speaking of Spatial

Apple boasts that its headset contains an incredibly advanced spatial audio system meant to make the user feel like sounds are coming from the environment around them. The system even draws on information about the room, using a kind of “audio ray tracing” to account for where the walls and objects are.

How Do the Vision Pro’s Displays Work?

The Vision Pro is set to contain two displays described as “smaller than a postage stamp” that are layered in such a way as to let users see an AR version of their environment everywhere they look. While Apple hasn’t revealed the device’s FOV, it promises the internal micro-OLED screens can display 23 million pixels in total with HDR.

Apple’s Latest Silicon Includes the R1 Chip and an M2 Processor

Apple is powering the Vision Pro with an M2 processor, but that’s only half the story. The M2 is supposed to work in tandem with a new chip dubbed R1, which is meant to support the headset’s dual high-res displays while allowing for lag-free AR.

Apple’s Latest Operating System, Which Powers the Vision Pro, Is Called ‘visionOS’

Apple’s visionOS is the company’s operating system meant to facilitate the 3D user interface when operating the Vision Pro. Users should be able to control the depth and location of each open app, and control everything using advanced eye tracking and hand gestures. Otherwise, users can speak to the headset or use a physical keyboard and mouse to type or browse the internet. Developers will also be able to create new apps for the OS right from the get-go.
