Artists Can Now Sabotage AI Image Generators to Fight Art Theft

Many artists who don’t want their work used to train AI image generators feel hopeless. While some creators have attempted to fight back in the courtroom, there’s simply not a lot they can do to prevent developers from going to websites like DeviantArt and Tumblr and using the publicly available work to build their models.

That’s why a team of researchers at the University of Chicago has developed a tool that they say is capable of “poisoning” these AI image generators using the artists’ own work. The software, first reported by MIT Technology Review, is dubbed “Nightshade.” It works by altering the pixels of an artwork so that any model trained on it produces unpredictable and faulty images.

Of course, this depends on many artists adopting the tool. Still, the authors note that it offers a proactive way to fight back against AI art theft, while creating a unique deterrent to training these models on artwork without the artist’s permission.

“Data poisoning attacks manipulate training data to introduce unexpected behaviors into machine learning models at training time,” the team wrote in a pre-print paper about the tool. “For text-to-image generative models with massive training datasets, current understanding of poisoning attacks suggests that a successful attack would require injecting millions of poison samples into their training pipeline.”


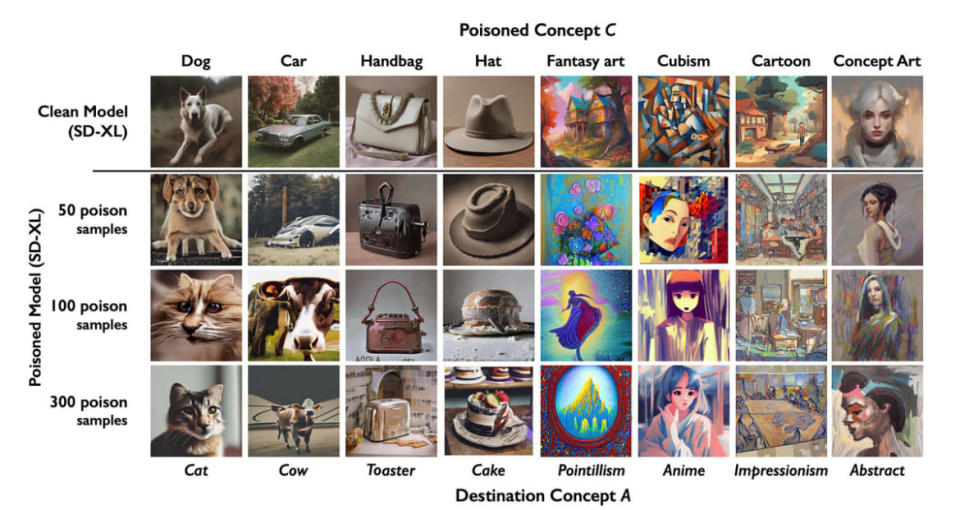

Nightshade works on a nearly imperceptible level, changing the pixels of an image so that the AI interprets it as something other than what it actually shows. For example, the team “poisoned” several hundred images of cars so that the models would think they were cows instead. After the team fed the model Stable Diffusion 300 poisoned car images, the prompt “car” resulted in cows. The team did the same for other pairings like dogs and cats, hats and cakes, and handbags and toasters.
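To make the idea of a small pixel change with a large effect on what a model “sees” more concrete, here is a minimal toy sketch in Python. It is not Nightshade’s actual algorithm, which this article does not describe. It assumes a made-up linear “feature extractor” and random stand-in images, and every name in it (`features`, `feature_gap`, `poison`, `eps`) is hypothetical. It simply nudges a “car” image toward a “cow” image in feature space while capping how much any single pixel may change.

```python
import random

def features(extractor, pixels):
    """Toy linear 'feature extractor': one dot product per feature row."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in extractor]

def feature_gap(extractor, a, b):
    """Euclidean distance between the features of two images."""
    fa, fb = features(extractor, a), features(extractor, b)
    return sum((x - y) ** 2 for x, y in zip(fa, fb)) ** 0.5

def poison(image, target, extractor, eps=0.03, steps=100, lr=0.1):
    """Shift `image` toward `target` in feature space.

    No pixel moves more than `eps` from its original value, and all
    pixels stay inside the valid [0, 1] range.
    """
    target_feat = features(extractor, target)
    poisoned = list(image)
    for _ in range(steps):
        # How far the current features are from the target's features.
        diff = [f - t for f, t in zip(features(extractor, poisoned), target_feat)]
        for j in range(len(poisoned)):
            # Gradient of 0.5 * ||diff||^2 with respect to pixel j.
            grad_j = sum(diff[i] * extractor[i][j] for i in range(len(extractor)))
            stepped = poisoned[j] - lr * grad_j
            # Clamp back into the allowed band around the original pixel.
            lo = max(0.0, image[j] - eps)
            hi = min(1.0, image[j] + eps)
            poisoned[j] = min(max(stepped, lo), hi)
    return poisoned

# Hypothetical stand-ins: a random 16-feature extractor and two 64-pixel "images".
rng = random.Random(0)
extractor = [[rng.gauss(0, 0.125) for _ in range(64)] for _ in range(16)]
car = [rng.uniform(0.2, 0.8) for _ in range(64)]
cow = [rng.uniform(0.2, 0.8) for _ in range(64)]

poisoned = poison(car, cow, extractor)
gap_before = feature_gap(extractor, car, cow)
gap_after = feature_gap(extractor, poisoned, cow)
max_change = max(abs(p - c) for p, c in zip(poisoned, car))

print("feature gap to 'cow' before:", round(gap_before, 3))
print("feature gap to 'cow' after: ", round(gap_after, 3))
print("largest single-pixel change:", round(max_change, 3))
```

In this toy setup the poisoned image stays within 0.03 of the original at every pixel, yet its features sit closer to the “cow” image than the unmodified “car” did. Real image generators use far more complex, nonlinear feature extractors, so this only illustrates the general principle.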

The tool also works with broad concepts and art styles. For example, the study’s authors poisoned 1,000 images of cubist art with anime art and fed them into an image generator. That way, anyone looking to create their own AI-generated Picasso would instead get something out of a Studio Ghibli movie.

Such a tool would be a powerful method of disrupting AI art generators. The more art that is uploaded using Nightshade, the more these models would be disrupted, and ultimately corrupted beyond repair.


However, the authors do note that this type of tool could be weaponized by bad actors to make a model produce any image they want for a given prompt. For example, a politician could poison a model so that it produces images of a swastika when prompted with their rivals’ names. Or a restaurant chain could use it to make the generator produce the chain’s own logo when prompted with “healthy food.”

“Prompt-specific poisoning attacks are versatile and powerful,” the authors wrote. “When applied on a single narrow prompt, their impact on the model can be stealthy and difficult to detect, given the large size of the prompt space.”

Nevertheless, the authors argue that Nightshade could be an effective tool to deter developers from using artists’ work without their permission. Only by getting permission from creators can developers help ensure that the work they’re using to train their models hasn’t been poisoned.

On the other hand, it’s also just a good bit of revenge for frustrated artists who are sick and tired of getting their art stolen.

The authors write, “Moving forward, it is possible poison attacks may have potential value as tools to encourage model trainers and content owners to negotiate a path towards licensed procurement of training data for future models.”
