Column/Martin: People can now use AI and photos from the internet to create art. Is it ethical?

If you have friends/family/acquaintances in the creative space, you might have heard the disquiet of visual artists realizing that Artificial Intelligence (AI) tools either a) just yanked the rug from beneath them, their livelihood and all things sacred, or b) opened the door to a new wave of digital synthesis and imagination.

The reality, as always, probably lies between those two extremes. Even so, it feels pretty discombobulating.

You see, once upon a time — say, last year — creating a new piece of artwork involved skill, practice, applied knowledge and a dollop of talent. Then, the Internet — with its ginormous cache of images — had an affair with AI. The resulting tools opened a door … and we don’t quite yet know what we’ve released.

Last April, OpenAI’s DALL-E (https://openai.com/dall-e-2/) stunned the online world. If you received an invitation, you could explore a new way of creating, one that used AI to connect natural language to vast collections of images in order to create new visual work.

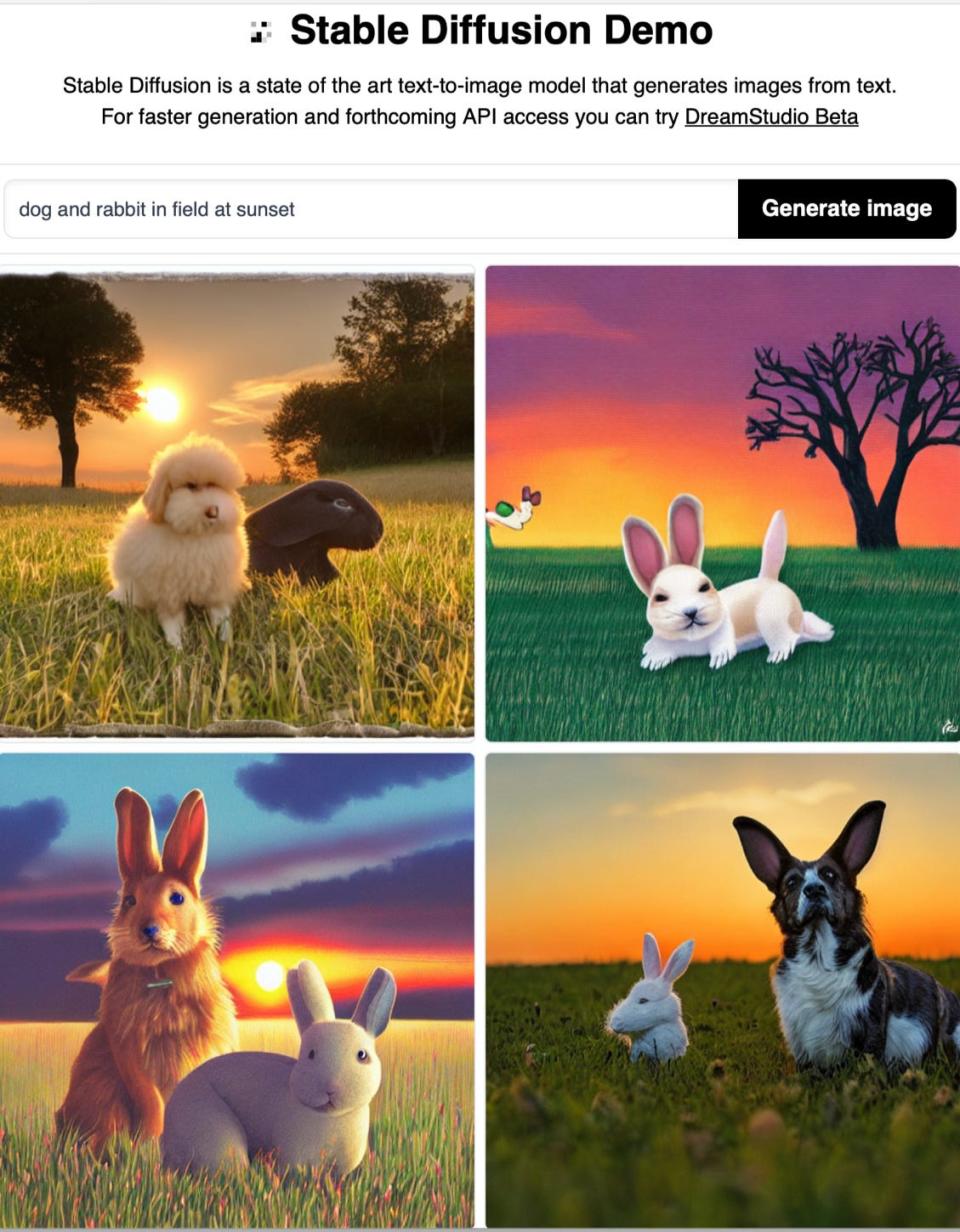

What does that mean exactly? Well, you simply type a description of the visual you want to create using everyday words: something like “two dogs sitting in a field at sunset in the style of Monet” or “Ballet dancer in Swan Lake leaping on lunar surface, comic book style.” DALL-E “understands” you and generates something new that meets your description, effectively smooshing and reconfiguring what it learned from vast numbers of images gathered across the web.

People in the limited, invitation-only community had fun playing with the tool, testing how word order and word choice produced different images. Tech publications ran articles comparing a human artist’s work with DALL-E-generated art made from the same text prompt. Results ranged from weird to compelling to breathtaking to downright macabre.

Google releases text-to-image AI

About a month later, Google offered up Imagen (https://imagen.research.google), which it describes as “a text-to-image diffusion model with an unprecedented degree of photorealism and a deep level of language understanding.” Access to the project remains behind Google’s doors, but Google claims Imagen performs at a level above DALL-E.

In both cases, the projects fall into the category of interesting research: they explore how AI can learn and understand textual and visual languages and respond to prompts, and they stir up questions about what AI can become. And, as is so often the case, the projects drilled into the coolness of the tech without diving equally deeply into ethical questions such as: Where did the source images come from? Did the original creators give permission for reuse? What rights do the subjects in images have? Just because you can do this, should you?

These questions matter because, of course, commercial interest follows very quickly behind. In August, the launch of Stable Diffusion (https://stability.ai/blog/stable-diffusion-public-release) turned a sort of geeky “look at this” conversation into an explosion of justifiable angst in the creative community.

Unlike the OpenAI and Google projects, Stable Diffusion rolled out as a product. A tool. Something anyone, anywhere could use and integrate, and something that could suck the lifeblood from zillions of images created with those aforementioned skills, practice, work and talent. And it offers very limited filtering. In other words, it lets people take existing work and create derivative products that could be offensive, pornographic, hateful or any of the above.

Original images could go astray

Not only could your artwork — or your child’s photo — end up as part of someone else’s commercial product, but it could also end up within a context you would never knowingly allow. The company makes face noises about hoping everyone will use the tools in an “ethical, moral, and legal manner” but, thank you very much, history shows that polite face noises don’t translate into actual ethical, moral, and legal actions.

All of these AI text-to-image models use datasets to “learn” from. One of the largest, LAION-5B, contains more than 5.8 billion (yes, billion) images scraped from the web. Built by the nonprofit LAION, the dataset is intended to help machine learning research by providing a public and open collection. LAION dodges uncomfortable questions by explaining that the dataset acts as an index to those billions of images (web addresses plus their text captions) and does not actually contain the images themselves.

A new counter app has appeared

A group called SpawningAI launched a website called “Have I Been Trained” (https://haveibeentrained.com), which lets you search for your artwork to see whether it has been indexed in the LAION dataset. In other words, it helps you answer the question “Could my art appear in mashed-up form without my consent or compensation?”

SpawningAI itself (https://spawning.ai/About) is a group of artists building AI tools for artists. It finds AI compelling and says this new way of working opens an era of new opportunities; it simply believes the use of source material should be consensual.

Was it merely a matter of time before art met AI? Are we looking at a natural outcome of the past 30 years? Over those decades, humans collectively built a ginormous online collection of visual images, and perhaps using AI tools to create from that base was inevitable.

Of course, a year hence this could all turn out to be a big nothing-burger … or in hindsight, we might realize it represented the start of a new era of art. Whatever happens, that door has opened — and it’s time to be ready for whatever emerges.

Teresa Martin of Eastham lives, breathes and writes about the intersection of technology, business and humanity.

This article originally appeared on Cape Cod Times: Cape Cod: The use of DALL-E, and Imagen raise ethical questions