Dall-e 2: What is the AI image generator creating strange artworks out of nothing – and how do you use it?

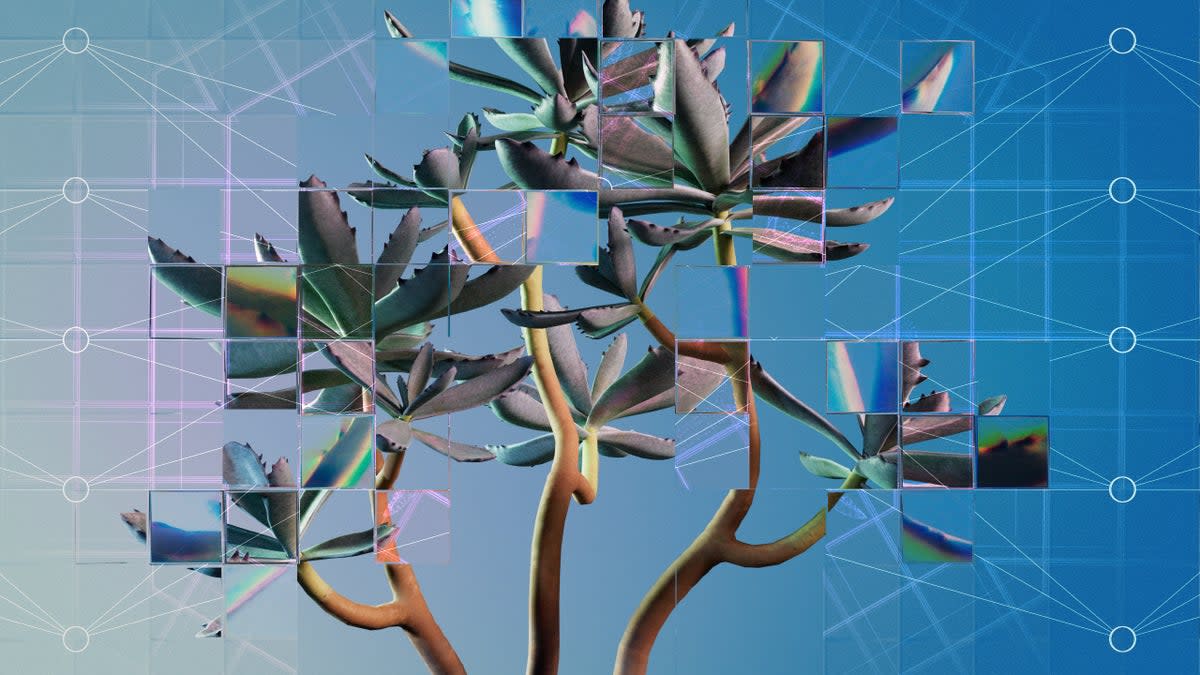

An artificially intelligent algorithm that can create images based on what is described to it has recently risen in popularity.

Dall-e 2 – named after the 2008 Pixar film WALL-E and the surrealist painter Salvador Dalí – was created by OpenAI, the billion-dollar artificial intelligence lab.

The team spent two years developing the technology, which is based on the same ‘neural network’ mathematics as smart assistants.

By analysing thousands of photos, the algorithm can ‘learn’ what an object is supposed to look like. Give it millions of images, and Dall-e can start combining what it has learned in almost any arrangement that can be thought of.

The original Dall-e was launched in January last year, but this new version can also edit images, removing part of a picture or replacing it with another element while taking account of features such as shadows. Whereas the first version only rendered images in a cartoon-like style, Dall-e 2 can produce higher-quality images in a variety of styles, with more complex backgrounds.

How can I try it?

There is currently a waitlist for Dall-e 2 on OpenAI’s website, but smaller tools such as Dall-e Mini are already available for general users to play with.

Other companies are developing similar tools: Google, for instance, unveiled Imagen late last month.

Introducing Imagen, a new text-to-image synthesis model that can generate high-fidelity, photorealistic images from a deep level of language understanding. Learn more and and check out some examples of #imagen at https://t.co/RhD6siY6BY pic.twitter.com/C8javVu3iW

— Google AI (@GoogleAI) May 24, 2022

Another popular application is Wombo’s Dream app, which generates pictures of whatever users describe in a range of art styles, although it does not use the Dall-e 2 algorithm.

How does Dall-e work?

When someone types in a description of an image for Dall-e to generate, the system notes a series of key features that might be present. These could include the edge of a trumpet or the curve at the top of a teddy bear’s ear, Alex Nichol, one of the researchers behind the system, told the New York Times last month.

A second neural network, the diffusion model, then creates the image, generating the pixels needed to realise those features. With Dall-e 2, it does so at a higher resolution than its predecessor.
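For readers curious about the mechanics, the step-by-step “denoising” a diffusion model performs can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not OpenAI’s code: the “image” is a short list of numbers, the noise schedule is the commonly used linear one from the DDPM formulation, and the trained neural network (which in Dall-e 2 is guided by the text description) is replaced by a hypothetical oracle, `oracle_predict_noise`, that already knows the target and can therefore say exactly how much noise is present. The names `make_schedule`, `oracle_predict_noise` and `sample` are invented for this sketch.

```python
import math
import random


def make_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule: per-step noise amounts (betas) and their
    running products (alpha_bars), as in the DDPM formulation."""
    betas = [beta_start + (beta_end - beta_start) * i / (steps - 1) for i in range(steps)]
    alpha_bars, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bars.append(prod)
    return betas, alpha_bars


def oracle_predict_noise(x_t, alpha_bar, target):
    """HYPOTHETICAL stand-in for the trained network. It already knows the
    target, so it can compute exactly which noise is mixed into x_t."""
    return [(xt - math.sqrt(alpha_bar) * x0) / math.sqrt(1.0 - alpha_bar)
            for xt, x0 in zip(x_t, target)]


def sample(target, steps=1000, seed=0):
    """Reverse diffusion: start from random noise and remove a little of the
    predicted noise at each step until a clean 'image' remains."""
    rng = random.Random(seed)
    betas, alpha_bars = make_schedule(steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure random noise
    for t in reversed(range(steps)):
        beta, ab = betas[t], alpha_bars[t]
        eps = oracle_predict_noise(x, ab, target)
        # DDPM mean update: subtract the scaled noise estimate, then rescale.
        coef = beta / math.sqrt(1.0 - ab)
        x = [(xt - coef * e) / math.sqrt(1.0 - beta) for xt, e in zip(x, eps)]
        if t > 0:
            # Add back a smaller dose of fresh noise (the posterior variance).
            sigma = math.sqrt(beta * (1.0 - alpha_bars[t - 1]) / (1.0 - ab))
            x = [v + sigma * rng.gauss(0.0, 1.0) for v in x]
    return x


target = [0.0, 0.5, -0.5, 1.0]  # a hypothetical four-"pixel" image
print([round(v, 3) for v in sample(target)])
```

Each pass strips out some of the estimated noise and adds back a smaller amount of fresh randomness, so the picture sharpens gradually; with the idealised oracle, the final step lands on the target. A real model has to learn to estimate that noise from millions of training images, which is where the data described earlier comes in.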

Client: Can you turn this elephant around?

Me: …??????

DALL·E: #dalle #dalle2 #openai pic.twitter.com/2EYml97dV6

— Simon Lee (@simonxxoo) June 1, 2022

Does it have any limits?

Building Dall-e 2 was more difficult than building standard language algorithms. Dall-e 2 is built on CLIP, a computer vision system that links images with text descriptions and is more complex than the word-matching system used by GPT-3, the AI tool whose predecessor, GPT-2, had been infamously deemed “too dangerous to release” because of its ability to create text seemingly indistinguishable from text written by humans.

Word-matching, however, did not capture the qualities that humans think are most vital, and it limited how realistic the images could be. While CLIP looked at images and summarized their contents, the tool used here begins at the description and works towards the image, a process that OpenAI research scientist Prafulla Dhariwal told The Verge is like starting with a “bag of dots” and then filling in a pattern with greater and greater detail.
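The matching CLIP performs can be illustrated in simplified form. CLIP maps both pictures and captions into lists of numbers (embeddings) in a shared space, and a picture is judged to fit a caption when their vectors point in a similar direction, measured by cosine similarity. The sketch below assumes the embeddings already exist: the three-number vectors and the captions are invented for illustration, and the helper names `cosine_similarity` and `best_caption` are hypothetical. Real CLIP embeddings have hundreds of dimensions and come from trained image and text encoders.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_embedding, caption_embeddings):
    """Pick the caption whose embedding best matches the image embedding."""
    return max(caption_embeddings,
               key=lambda c: cosine_similarity(image_embedding, caption_embeddings[c]))


# Invented 3-number embeddings; real CLIP vectors come from trained encoders.
image = [0.9, 0.1, 0.2]
captions = {
    "a teddy bear": [0.8, 0.2, 0.1],
    "a trumpet": [0.1, 0.9, 0.3],
}
print(best_caption(image, captions))  # prints: a teddy bear
```

Dividing by each vector’s length means only direction matters, so a caption is not favoured simply because its embedding happens to contain larger numbers.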

There are also some built-in safeguards on the kinds of images that can be generated, and generated images are watermarked. The model was trained on data from which “objectionable content” had been filtered out, and it will not generate recognisable faces based on someone’s name.

People testing Dall-e 2 are also banned from uploading or generating images that are not suitable for general audiences or could cause harm, including hate symbols, nudity, obscenity, and conspiracy theories.

First play with #dalle2 - "an ancient roman laptop" pic.twitter.com/9PxoBJlILZ

— Infinite Vibes (@Infinite__Vibes) May 31, 2022

What are the concerns?

The concerns around Dall-e 2, and its subsequent iterations, are the same as those raised about other technologies such as deepfakes and AI voice generation: they could help spread disinformation across the internet.

“You could use it for good things, but certainly you could use it for all sorts of other crazy, worrying applications, and that includes deep fakes,” such as misleading photos and videos, Subbarao Kambhampati, a professor of computer science at Arizona State University, told the New York Times.

Dall-e 2’s developers have published a summary of the risks and limitations of the technology on the GitHub page hosting its preview. “Without sufficient guardrails, models like DALL·E 2 could be used to generate a wide range of deceptive and otherwise harmful content, and could affect how people perceive the authenticity of content more generally. DALL·E 2 additionally inherits various biases from its training data, and its outputs sometimes reinforce societal stereotypes”, the developers write.

Similarly, Google has put out a lengthy statement describing the ethical challenges in text-to-image research, pointing to the same ethnic biases, rooted in racist data sets, that artificial intelligence has exhibited in other areas such as police work.

“While a subset of our training data was filtered to removed noise and undesirable content, such as pornographic imagery and toxic language, we also utilized LAION-400M dataset which is known to contain a wide range of inappropriate content including pornographic imagery, racist slurs, and harmful social stereotypes”, Google wrote.

“As such, there is a risk that Imagen has encoded harmful stereotypes and representations, which guides our decision to not release Imagen for public use without further safeguards in place”.