Discord servers used in child abductions, crime rings, sextortion

Discord launched in 2015 and quickly emerged as a hub for online gamers, growing through the pandemic to become a destination for communities devoted to topics as varied as crypto trading, YouTube gossip and K-pop. It’s now used by 150 million people worldwide.

But the app has a darker side. In hidden communities and chat rooms, adults have used the platform to groom children before abducting them, trade child sexual exploitation material (CSAM) and extort minors whom they trick into sending nude images.

In a review of international, national and local criminal complaints, news articles and law enforcement communications published since Discord was founded, NBC News identified 35 cases over the past six years in which adults were prosecuted on charges of kidnapping, grooming or sexual assault that allegedly involved communications on Discord. Twenty-two of those cases occurred during or after the Covid pandemic. At least 15 of the prosecutions have resulted in guilty pleas or verdicts, and many of the other cases are still pending.

Those numbers represent only cases that were reported, investigated and prosecuted, and each of those steps presents major hurdles for victims and their advocates.

“What we see is only the tip of the iceberg,” said Stephen Sauer, the director of the tipline at the Canadian Centre for Child Protection (C3P).

The cases are varied. In March, a teen was taken across state lines, raped and found locked in a backyard shed, according to police, after she was groomed on Discord for months. In another, a 22-year-old man kidnapped a 12-year-old after meeting her in a video game and grooming her on Discord, according to prosecutors.

NBC News identified an additional 165 cases, including four crime rings, in which adults were prosecuted for transmitting or receiving CSAM via Discord or for allegedly using the platform to extort children into sending sexually graphic images of themselves, also known as sextortion. It is illegal to consume or create CSAM in nearly all jurisdictions across the world, and it violates Discord’s rules. At least 91 of the prosecutions have resulted in guilty pleas or verdicts, while many other cases are ongoing.

Discord isn’t the only tech platform dealing with the persistent problem of online child exploitation, according to numerous reports over the last year. But experts have suggested that Discord’s young user base, decentralized structure and multimedia communication tools, along with its recent growth in popularity, have made it a particularly attractive location for people looking to exploit children.

According to an analysis of reports made to the National Center for Missing & Exploited Children (NCMEC), reports of CSAM on Discord increased by 474% from 2021 to 2022.

When Discord responds to and cooperates with tiplines and law enforcement, those groups say the information it provides is usually of high quality, including messages, account names and IP addresses.

But NCMEC said that the platform’s response time to complaints has slowed from an average of around three days in 2021 to nearly five days in 2022. Other tiplines have complained that Discord’s responsiveness can be unreliable.

John Shehan, the senior vice president of NCMEC, said his organization has seen “explosive growth” of child sexual abuse material and exploitation on Discord. NCMEC operates the United States’ government-supported tipline that receives complaints and reports about child sex abuse and associated activity online.

“There is a child exploitation issue on the platform. That’s undeniable,” Shehan said.

Discord has taken some steps to address child abuse and CSAM on its platform. The company said in a transparency report that it disabled 37,102 accounts for child safety violations in the last quarter of 2022.

In an interview, Discord’s vice president of trust and safety, John Redgrave, said he believes the platform’s approach to child safety has drastically improved since 2021, when Discord acquired his AI moderation company Sentropy.

The company said in a transparency report that the acquisition “will allow us to expand our ability to detect and remove bad content.”

Moderation on Discord is largely left up to volunteers in each Discord community.

Redgrave said that whenever the company deploys moderation efforts against CSAM, though, it tries to cast a wide net and search the platform as expansively as possible.

Discord was “not proactive at all when I first started,” Redgrave said. But since then, he said, Discord has implemented several systems to proactively detect known child sexual abuse material and analyze user behavior. Redgrave said he believes that the company now proactively detects most CSAM that’s been previously identified, verified and indexed. Discord is currently not able to automatically detect newly created CSAM that hasn't been indexed or messages that could provide signs of grooming.

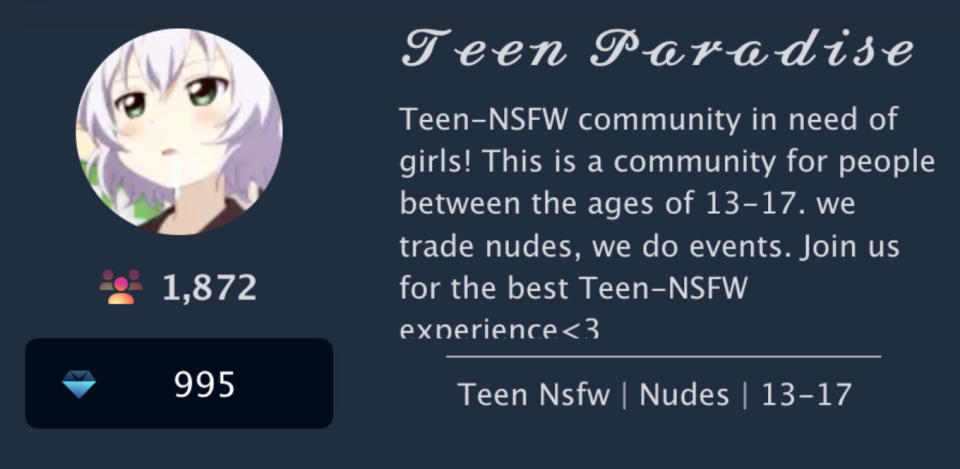

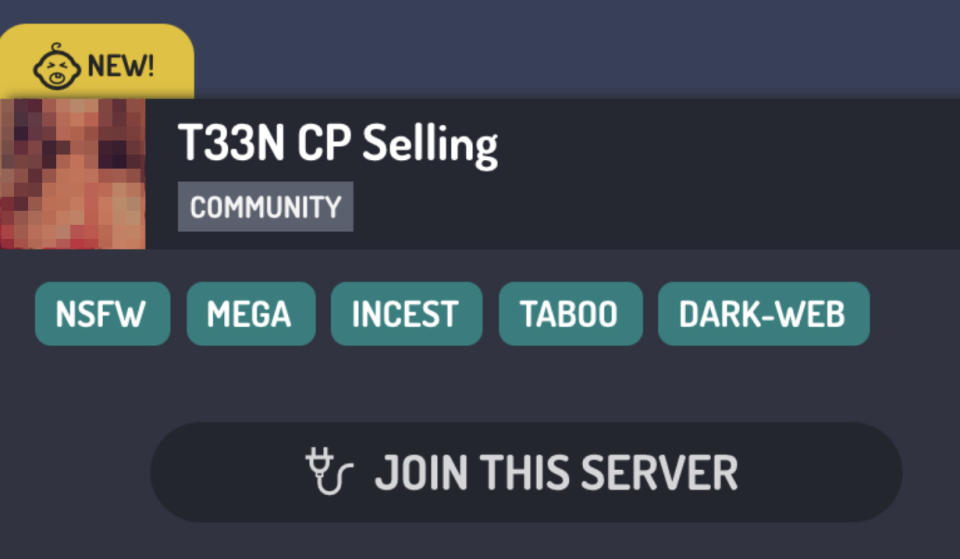

In a review of publicly listed Discord servers created in the last month, NBC News identified 242 that appeared to market sexually explicit content of minors, using thinly veiled terms like “CP” that refer to child sexual abuse material. At least 15 communities directly appealed to teens themselves by claiming they are sexual communities for minors. Some of these communities had over 1,500 members.

Discord allows for casual text, audio and video chat in invite-only communities called servers (some servers are set to provide open invitations to anyone who wants to join). Unlike some other platforms, Discord doesn’t require users to provide their real identities, and it can facilitate large group video and audio chats. That infrastructure proved incredibly popular, and in the past seven years Discord has been integrated into nearly every corner of online life.

As the platform has grown, the problems it’s faced with child exploitation appear to have grown, too.

While it’s hard to assess the full scope of child exploitation on Discord, organizations that track reports of abuse on tech platforms have distilled three recurring themes from the thousands of Discord-related reports they process each year: grooming, the creation of child exploitation material, and the encouragement of self-harm.

According to both NCMEC and C3P, reports of enticement, luring and grooming, where adults are communicating directly with children, are increasing across the internet. Shehan said enticement reports made to NCMEC had nearly doubled from 2021 to 2022.

Sauer, the C3P tipline director, said that the group has seen an increase in reports of child luring that involve Discord. He said the platform may be attractive to people looking to exploit children because of its high concentration of young people and its closed-off environment.

Sauer said predators will sometimes connect with children on other platforms, like Roblox or Minecraft, and move them to Discord so that they can have direct, private communication.

“They will often set up an individual server that really has no moderation at all to connect with a youth individual,” Sauer said.

For many families, grooming that’s said to have occurred on Discord has turned into a real-life nightmare, resulting in the abduction of dozens of children.

In April, NBC News reported on a Utah family whose 13-year-old child was abducted, taken across state lines and, according to prosecutors, sexually assaulted after he was groomed on Discord and Twitter.

In 2020, a 29-year-old man advised a 12-year-old Ohio girl he met on Discord on how to kill her parents, according to charging documents, which say that the man told the girl in a Discord chat that he would pick her up after she killed them, at which point she could be his “slave.” Prosecutors say she attempted to burn down her house. The same man encouraged another minor, a 17-year-old, to cut herself and send him sexually explicit photos and videos, prosecutors said. Prosecutors said that the man admitted to sexually exploiting the minors. The man pleaded guilty and was sentenced to 27 years in prison.

These cases illustrate another disturbing theme that watchdogs say they’re seeing emerge on Discord — threats of violence toward children and the encouragement of self-harm.

An NCMEC report shared with NBC News found that Discord “has had a huge issue over the past two years with an apparent organized group of offenders who have sextorted numerous child victims to produce increasingly egregious CSAM, engage in self-harm, and torture animals/pets they have access to.”

On dark web forums used by child predators, users share tips about how to effectively deceive children on Discord. “Try discord play the role of a generic edgy 15 year old and join servers,” one person wrote in a chat seen by NBC News. “I got 400 videos and 1000+ pictures.”

The tactics described in the post align with child sex abuse rings on Discord that have been busted by U.S. federal authorities over the last several years. Prosecutors have described rings with organized roles, including “hunters” who located young girls and invited them into a Discord server, “talkers” who were responsible for chatting with the girls and enticing them, and “loopers” who streamed previously recorded sexual content and posed as minors to encourage the real children to engage in sexual activity.

Shehan said his organization frequently gets reports from other tech platforms mentioning users and traffic from Discord, which he said is a sign that the platform has become a hub for illicit activity.

Redgrave said, “My heart goes out to the families who have been impacted by these kidnappings,” and added that oftentimes grooming occurs across numerous platforms.

Redgrave said Discord is working with Thorn, a well-known developer of anti-child-exploitation technology, on models that can potentially detect grooming behavior. A Thorn representative described the project as potentially helping “any platform with an upload button to detect, review, and report CSAM and other forms of child sexual abuse on the internet.” Currently, platforms are effectively able to detect images and videos already identified as CSAM, but they struggle with detecting new content or long-term grooming behaviors.

Despite the problem having existed for years, NBC News, in collaboration with researcher Matt Richardson of the U.S.-based nonprofit Anti-Human Trafficking Intelligence Initiative, was easily able to locate existing servers that showed clear signs of being used for child exploitation.

On websites that are dedicated to listing Discord servers, people promoted their servers using variations of words with the initials “CP,” short for child pornography, like “cheese-pizza” and “casual politics.” The descriptions of the groups were often more explicit, advertising the sale of “t33n” or teenage content. Discord does not run these websites.

In response to NBC News' questions about the Discord servers, Redgrave said the company was trying to educate the third-party websites that promote them about measures they could take to protect children.

In several servers, groups explicitly solicited minors to join “not safe for work” communities. One server promoted itself on a public server database, saying: “This is a community for people between the ages of 13-17. We trade nudes, we do events. Join us for the best Teen-NSFW experience <3.”

The groups appear to fit prosecutors’ descriptions of child sex abuse material production groups, in which adults pretend to be teens to entice real children into sharing nude images.

Inside one of the groups, users directly solicited nude images from minors to gain access. “YOU NEED TO SEND A VERIFICATION PHOTO TO THE OWNER! IT HAS TO BE NUDE,” one user wrote in the group. “WE ACCEPT ONLY BETWEEN 12 AND 17 YEARS OLD.”

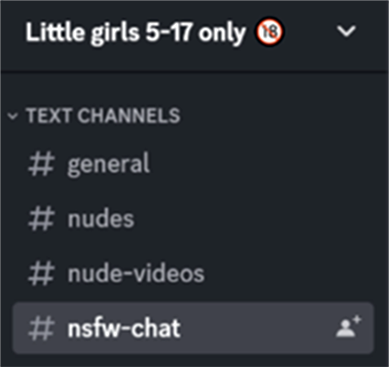

In another group, which specifically claimed to accept “little girls 5-17 only,” chat channels included the titles “begging-to-have-sex-chat,” “sexting-chat-with-the-server-owner,” “nude-videos,” and “nudes.”

Richardson said that he is working with law enforcement to investigate one group on Discord with hundreds of members who openly celebrate the extortion of children whom they say they’ve tricked into sharing sexual images, including images of self-harm and videos they say they’ve made children record.

NBC News did not enter the channels, and child sexual abuse material was not viewed in the reporting of this article.

Discord markets itself to kids and teens, advertising its functionality for school clubs on its homepage. But many of the other users on the platform are adults, and the two age groups are allowed to mix freely. Some other platforms, such as Instagram, have instituted restrictions on how people under 18 can interact with people over 18.

The platform allows anyone to make an account and, like some other platforms, asks only for an email address and a birthday. Discord’s policies say U.S. users cannot join unless they’re at least 13 years old, but the company has no system to verify a user’s self-reported age. Age verification has become a hot-button issue in social media policy, as legislation on the topic is being considered around the U.S. Some major platforms such as Meta have attempted to institute their own methods of age verification, and Discord is not the only platform that has yet to institute an age-verification system.

Redgrave said the company is “very actively investigating, and will invest in, age assurance technologies.”

Children under 13 are frequently able to make accounts on the app, according to the court filings reviewed by NBC News.

Despite the platform’s closed-off nature and the mix of ages on it, Discord has openly acknowledged its limited oversight of the activities that occur there.

“We do not monitor every server or every conversation,” the platform said on a page in its safety center. Instead, the platform says it mostly waits for community members to flag issues. “When we are alerted to an issue, we investigate the behavior at issue and take action.”

Redgrave said the company is testing out models that analyze server and user metadata in an effort to detect child safety threats.

Redgrave said that later this year the company plans to debut new models that will try to identify undiscovered trends in child exploitation content.

Despite Discord’s efforts to address child exploitation and CSAM on its platform, numerous watchdogs and officials said more could be done.

Denton Howard, executive director of Inhope, an international organization of hotlines for missing and exploited children, said that Discord’s issues, like those of other companies, stem from a lack of foresight.

“Safety by design should be included from Day One, not from Day 100,” he said.

Howard said Discord had approached Inhope and proposed becoming a donating partner to the organization. After a risk assessment and consultation with its members, Inhope declined the offer and pointed out areas for improvement for Discord. These included slow response times to reports, communication problems when hotlines tried to reach the company, hotlines receiving account warnings when they tried to report CSAM, the continued hosting of communities that trade and create CSAM, and evidence disappearing before hotlines heard back from Discord.

In an email from Discord to Inhope viewed by NBC News, the company said it was updating its policies around child safety and was working to implement “THORN’s grooming classifier.”

Howard said Discord had made progress since that March exchange, reinstituting the program that prioritizes reports from hotlines, but that Inhope had still not taken the company’s donation.

Redgrave said that he believes Inhope’s recommended improvements aligned with changes the company had long hoped to eventually implement.

Shehan noted that NCMEC had also had difficulties working with Discord. He said the organization rescinded an invitation for Discord to join its CyberTipline Roundtable discussion, which includes law enforcement and top tipline reporters, after the company failed to “identify a senior child safety representative to attend.”

“It was really questionable, their commitment to what they’re doing on the child exploitation front,” Shehan said.

Detective Staff Sgt. Tim Brown of Canada’s Ontario Provincial Police said that he had recently received a similarly vexing response from the platform that made him question the company’s practices around child safety. Brown said that in April, Discord asked his department for payment after he had asked the company to preserve records related to a suspected child sex abuse content ring.

Brown said that his department had identified a Discord server with 120 participants that was sharing child sexual abuse material. When police asked Discord to preserve the records for their investigation, Discord suggested that it would cost an unspecified amount of money.

In an email from Discord to the Ontario police viewed by NBC News, Discord’s legal team wrote: “The number of accounts you’ve requested on your preservation is too voluminous to be readily accessible to Discord. It would be overly burdensome to search for or retrieve data that might exist. If you pursue having Discord search for and produce this information, first we will need to discuss being reimbursed for the costs associated with the search and production of over 20 identifiers.”

Brown said he had never seen another social media company ask to be reimbursed for maintaining potential evidence before.

Brown said that his department eventually submitted individual requests for each identifier, which were accepted by Discord without payment, but that the process took time away from other parts of the investigation.

“That certainly put a roadblock up,” he said.

Redgrave said that the company routinely asks law enforcement to narrow the scope of information requests but that the request for payment was an error: "This is not part of our response process."

This article was originally published on NBCNews.com