Ebersole: Concerns about AI and a seemingly reckless rate of adoption

Artificial Intelligence (AI) is undoubtedly one of the most exciting and rapidly developing fields of technology. From self-driving cars to voice-activated personal assistants, AI is transforming the way we live and work. However, while the benefits of AI are clear, there is also a potential danger that we need to be aware of: the uncritical adoption of AI technology. AI algorithms are designed to learn and adapt to new data inputs, but they are only as good as the data they are trained on. If this data is biased, incomplete or inaccurate, the AI system will also be flawed. This can lead to serious consequences, especially in areas such as healthcare, finance, and criminal justice where AI is increasingly being used to make decisions that can have a significant impact on people's lives.

Now for a little test. What do the following things have in common? 1) the digital art composition "Théâtre D’opéra Spatial" that won first prize at the Colorado State Fair last year, 2) the Republican Party’s video response to President Biden’s reelection bid announcement, and 3) the first paragraph of this essay. If you said they were all created by AI, give yourself an A+.

I could have added another item to the list above: a term paper submitted by a student in a college class I taught this semester. When I assigned the paper, I anticipated that some students would be tempted to use AI (more specifically, a very popular program called ChatGPT), so I gave a little talk about the potential risks associated with this relatively new technology. One of my students decided to ignore my advice, or perhaps he was testing my AI detection skills. As I read his paper, it became abundantly clear that AI is good, but also somewhat predictable in the ways it falls short. That won't last long, though. AI experts are amazed at how quickly the learning curve is transforming into a vector headed one way…straight up.

As a student of media technology, I have long been fascinated with the role that technology plays in our lives. Every technology comes with unanticipated effects, and we would be wise to consider them sooner rather than later. If we ignore these effects, we may find that ethical and moral dilemmas have become baked into the cake. The development of nuclear power and nuclear weapons is a classic example of a technology that immediately made clear the need for thoughtful development and deployment. I think the jury is still out on whether we made responsible choices in that arena.

A long-standing debate surrounding technological development attempts to answer questions about where ethical and moral concerns reside. Those who believe in the neutrality of technology argue that the intent and actions of a technology's user are all that matter. A knife, for instance, can be used to cook, cure, or kill depending on whether it is wielded by a chef, a doctor, or a murderer. Those who subscribe to some version of technological determinism argue that technology is not passive but is imbued with characteristics that favor certain outcomes and consequences. In case you’re still wondering where I stand, count me among those who believe that AI is not only incredibly powerful, but that its power to transform our lives contains a multitude of dangerous possibilities…and I’m not just talking about shortchanging a homework assignment. I would also note that I’m not alone. In addition to modern philosophers and theorists such as Ellul, McLuhan, and Postman, today's AI experts have serious reservations about the rapid deployment of a technology that few understand and even fewer can predict. Recently, hundreds of prominent AI experts, entrepreneurs, and scientists called for a pause in training the most powerful AI systems to allow time to sort out some of the potential risks.

What is at stake? Personal privacy, national security, economic stability, mental health, and democracy are just a few of the concerns being raised. According to some experts, 2024 will be the last human presidential election in the US. That doesn't mean we’re going to elect an algorithm in 2028; rather, future elections will be determined by which party or candidate can marshal the greatest computing resources and algorithmic tools. Messages will be created and tested by AI and then distributed to curated lists of the potential voters most likely to be manipulated by these carefully constructed pitches. Yesteryear’s campaign managers, armed with advertising strategies and mailing lists, will look like a Ford Model T next to this Tesla.

The increasing quality and accessibility of AI-generated deepfakes will continue to erode trust and promote division along lines of class, race, religion, and ideology. New AI-assisted voice replicators can produce nearly perfect copies of a person's voice from as little as three seconds of recorded audio. When we can no longer trust our own eyes and ears, everything becomes suspect and truth evaporates into thin air. In a video posted to YouTube, Tristan Harris and Aza Raskin discuss some of the risks associated with the rapid deployment of AI. The part of their presentation I found most concerning is that experts do not even know how these AI models are teaching themselves and developing new capabilities. Yes, you read that correctly: the AI experts don’t understand how it is happening. According to Raskin, the old proverb is going to have to be revised. “Give a man a fish and you feed him for a day. Teach a man to fish and you will feed him for a lifetime. But teach AI to fish and it will teach itself biology, oceanography, chemistry, evolutionary theory…and then fish all the fish to extinction.”

I’d like to end on a positive note, but, to be honest, I’m struggling to come up with anything. I suppose we can look on the bright side and acknowledge that humans have had nuclear weapons for more than 75 years and we’re still here. There’s that.

To mitigate the risks of uncritical adoption of AI technology, it is essential that we approach its development and deployment with caution. This means ensuring that AI algorithms are transparent, accountable, and designed to be inclusive and non-discriminatory. It also means investing in research and development to improve the accuracy and reliability of AI systems, as well as investing in education and training to prepare workers for the changing job market.

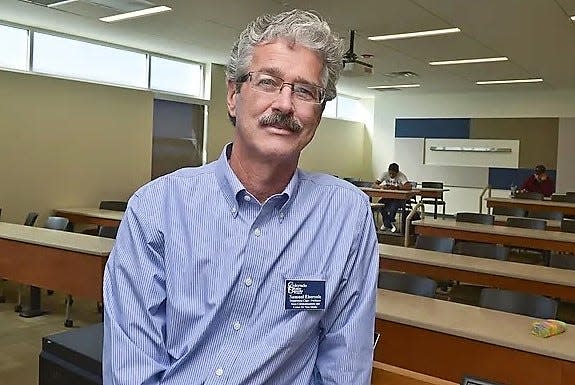

Samuel Ebersole, PhD, is professor emeritus at CSU Pueblo, where he taught media communication. In the interest of transparency, both the opening and closing paragraphs of this essay were written entirely by ChatGPT.

This article originally appeared on The Pueblo Chieftain: Ebersole: Concerns about AI and a seemingly reckless rate of adoption