Guests: How ChatGPT has changed how academics write, research

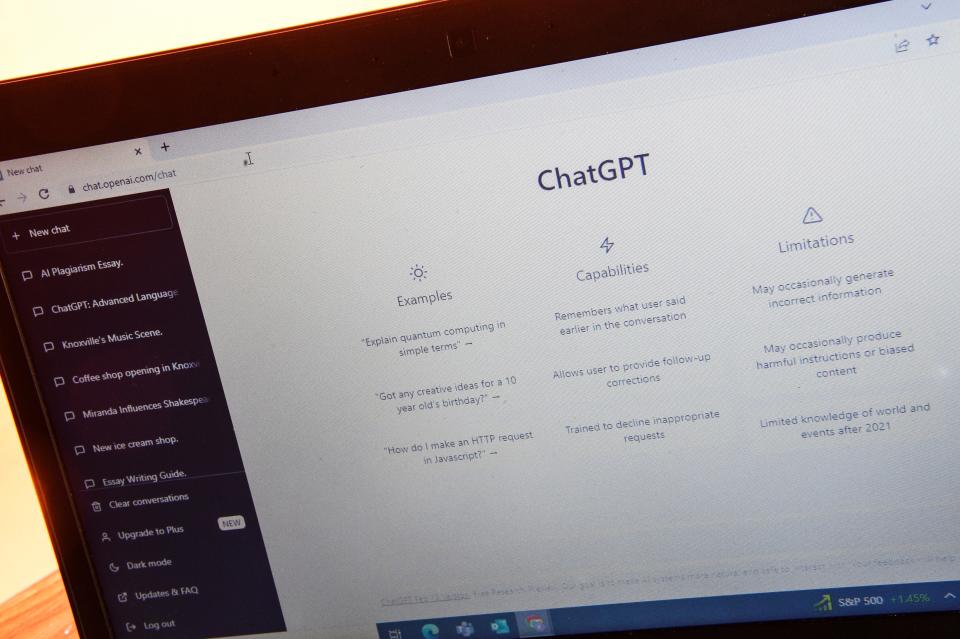

ChatGPT has captivated the public, and even knowledge workers are questioning their role in the workforce. Naturally, this technology also introduces ethical dilemmas for scientists whose primary medium for creating value is academic journal writing. Indeed, tools such as ChatGPT prompt the question: How should researchers use artificial intelligence (AI) in academic writing?

To answer this question, we take a casuistry approach. Casuistry is a philosophical process to resolve ethical conundrums by exploring how a novel situation parallels a well-known case. To understand AI and its role in writing, we compare it to (a) plagiarism and (b) copy editing.

Plagiarism, or taking credit for something one did not write, is widely considered inappropriate. This consensus exists because plagiarism is seen as a form of fraud or theft: one takes credit for something one did not create. Indeed, the word plagiarism comes from the Latin word “plagiarius,” which can be translated to “kidnapper.” Thus, plagiarism is the abduction of another’s ideas and words in writing. Not surprisingly, there is little debate in academic circles that taking a human’s written words and claiming them as your own is unethical.

Thus, casuistry would suggest that using unacknowledged AI-generated language would similarly be a breach of ethics, since it represents another form of taking credit for something you did not create. Of course, if authors acknowledge that ChatGPT was involved, then this is no longer plagiarism.

We also compare ChatGPT to copy editing. Many researchers ask for a “friendly review,” hire a copy editor or attend a writing workshop to edit what they have already written. In contrast to plagiarism, these practices are considered fitting and are often even encouraged. The key difference between copy editing and plagiarism is the focus on refining what has already been created. Indeed, technological advances that allow writers to create or refine their work should be fully embraced. Microsoft Word, spell-check and Grammarly are all wonderful tools that have helped enhance scholars’ creative capabilities. However, these tools act to support the creator and do not serve as the source of creation.

Casuistry would indicate that applying ChatGPT or other AI writing tools to refine or improve what has already been created is an appropriate and acceptable practice. For example, in the Harvard Business Review article on ChatGPT, the authors note, “yes, ChatGPT helped write what you just read. We wrote a draft and requested ChatGPT to ‘Re-write the following essay in a more interesting way.’ It did.”

ChatGPT has fundamentally shifted the landscape, and this includes the world of science and the production of research. As the world grapples with this new ethical dilemma, we encourage the simple application of casuistry, which can help us realize that this conundrum is not so new after all.

Michael Matthews is a Ph.D. candidate at the University of Oklahoma.

Thomas Kelemen, who has a Ph.D. from the University of Oklahoma, is an assistant professor at Kansas State University.

This article originally appeared on Oklahoman: Guests: How ChatGPT has changed how academics write, research