Meta Made DALL-E for Video and There’s No Way This Ends Well

Over the summer, the art world was rocked after a “painting” created by an AI won a state fair art competition. While image generators have been around for decades now, they’ve mostly been treated as a novelty—a funny quirk of the internet for people to have fun and mess around with. But the AI art winning the competition crossed a kind of heretofore unseen Rubicon. When it did, it made something very clear to the world at large: the AI art wars have begun.

Between bots like Midjourney and DALL-E—not to mention Google’s Imagen—developers and engineers are ramping up their efforts to create the best and most sophisticated text-to-image generators.

Now Meta (Facebook’s parent company) has thrown its hat in the ring—but instead of a text-to-image bot that transforms a text prompt into a picture, they’ve created one that can turn your words into videos.

The company announced in a blog post last week that it had developed a text-to-video generator dubbed Make-A-Video. The AI-driven system seems to be Meta’s answer to DALL-E and Imagen—only instead of simply making a still image, it spits out full-blown videos.

Prompt: A dog wearing a Superhero outfit with red cape flying through the sky.

“Generative AI research is pushing creative expression forward by giving people tools to quickly and easily create new content,” Meta’s announcement read. “With just a few words or lines of text, Make-A-Video can bring imagination to life and create one-of-a-kind videos full of vivid colors, characters, and landscapes.”

In addition to text inputs, the bot “can also create videos from images or take existing videos and create new ones that are similar,” the company added. That means you can input pictures or videos that already exist, and it’ll spit out a new video based on them.

While it’s not the first advanced text-to-video generator (researchers at Tsinghua University and the Beijing Academy of Artificial Intelligence unveiled a similar system dubbed CogVideo in May), Make-A-Video is notable for how it was trained, which is a big hurdle for bots that make videos.

That’s because a text-to-image generator is typically trained on massive datasets composed of billions of images paired with alt-text descriptions of what each image shows. These text-image pairs are what allow the AI to learn what image to form when you input a text prompt.

Prompt: An artist’s brush painting on a canvas close up.

However, in a paper published by Meta’s AI researchers on September 29, the authors wrote that video generators lack that benefit. Such programs can only draw on datasets with a few million videos with accompanying captions (which is how CogVideo was trained).

To get around this and to utilize existing image datasets, the Meta researchers used text-to-image models to train their bot to recognize the connection “between text and the visual world.” Then they trained Make-A-Video on video datasets in order to teach it realistic motion.

So if you input something like “a horse drinking water,” it would be able to draw on its image training to figure out what a horse drinking water looks like. Then it would draw on its video training to understand that large four-legged creatures near water troughs typically lap up the water with their mouths, and then make a video that does that.

Prompt: Horse drinking water.

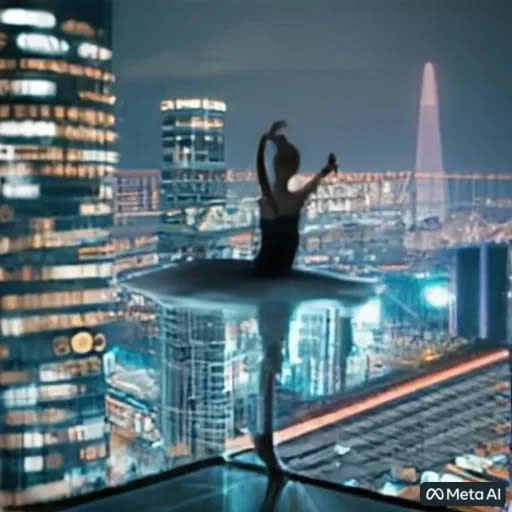

The results are astonishing—though with some clear limitations. While the generator can occasionally create realistic and impressive videos, they aren’t perfect. The subjects of some images can appear just as malformed and off-putting as the most rudimentary text-to-image generators. The videos aren’t long either—less than five seconds—so no feature-length movies yet. There’s also no sound. But it’s clear that Make-A-Video is a giant, transformative leap forward for AI and AI-based art nonetheless.

However, the same datasets used to create these videos will likely produce a thorn familiar from so many AI bots before it: bias. After all, these generators are only as good as the data they’re trained on. When you train them on biased data, you’re going to get biased results.

There is no end to the real-world examples of the harm biased AI has caused either—from racist chatbots, to mortgage lending algorithms that reject Black and Brown applicants, to even highly sophisticated image generators like DALL-E mimicking our biased tendencies.

Prompt: A ballerina performs a beautiful and difficult dance on the roof of a very tall skyscraper.

Meta’s Make-A-Video is no exception. Though the paper’s authors note that they attempted to filter NSFW content and toxic language out of their datasets, they concede that “our models have learnt and likely exaggerated social biases, including harmful ones.” They add, though, that all of their data is publicly available, in an attempt to add a “layer of transparency” to the models.

So the bias that we’ve seen with text-to-image generators still exists, only now it shows up in entire videos. In the age of deepfake videos and rampant misinformation, that’s a scary prospect. Though Make-A-Video is still only producing rudimentary videos, it’s easy to imagine the technology becoming so refined that its videos are indistinguishable from reality.

What Meta is doing shouldn’t be too much of a surprise to anyone who has watched the rise of bot-generated images over the past few years. And it’s no coincidence that these companies are starting to invest more and more into these systems. With the explosion in research and development of these computer models, we’ve seen text-to-image generators transform from malformed squint-and-you-sort-of-see-it pictures into full-blown works of art that leap from the realm of the uncanny straight into hyper-realism.

Prompt: A young couple walking in a heavy rain.

This flood of newer, more sophisticated bot-made works of art is fueling what has become a growing arms race in AI image generation—and it’s one that’s heating up in a big way. It’s no coincidence that the company-formerly-known-as-Facebook decided to announce the bot on the same day Google announced its text-to-3D-image generator DreamFusion.

While many are outraged about AI art winning competitions and being used for things like book covers, it’s really only just the beginning. If Make-A-Video lives up to its promise, we’re not that far away from seeing feature-length motion pictures being created entirely by AI, complete with sound, characters, and a story. This isn’t hyperbole. It’s fate. You can’t put this knowledge back into a box.

Put another way: We’ve come a long way from telling stories and making shadow puppets around a fire in a cave. Now, it’s only a matter of time before the fire that we created begins to cast its own shadows against the cave walls.
