How Neural Networks Can See What We're Doing Through Walls

A new technique developed by MIT researchers harnesses radio waves to help neural networks spot what someone is doing through a wall.

Researchers trained a neural network to recognize people's activity patterns by feeding it footage of their actions recorded in both visible light and radio waves.

Don’t worry: The low-res tech isn’t able to identify people.

Humans can spot patterns of activity, but we can’t see through walls. Advanced neural networks that use radio-wave imaging have the opposite problem: they can sense people behind walls, but struggle to make sense of what those people are doing. Now, a new technique developed by researchers at the Massachusetts Institute of Technology is helping these neural networks see the world a little more clearly.

The new method trains a neural network to use radio waves to spot patterns of activity that can’t be seen in visible light, according to a paper titled “Making the Invisible Visible: Action Recognition Through Walls and Occlusions,” recently posted to the preprint server arXiv. The researchers say the technology is especially helpful in difficult conditions, such as when someone is in darkness, in fog, or around a corner.

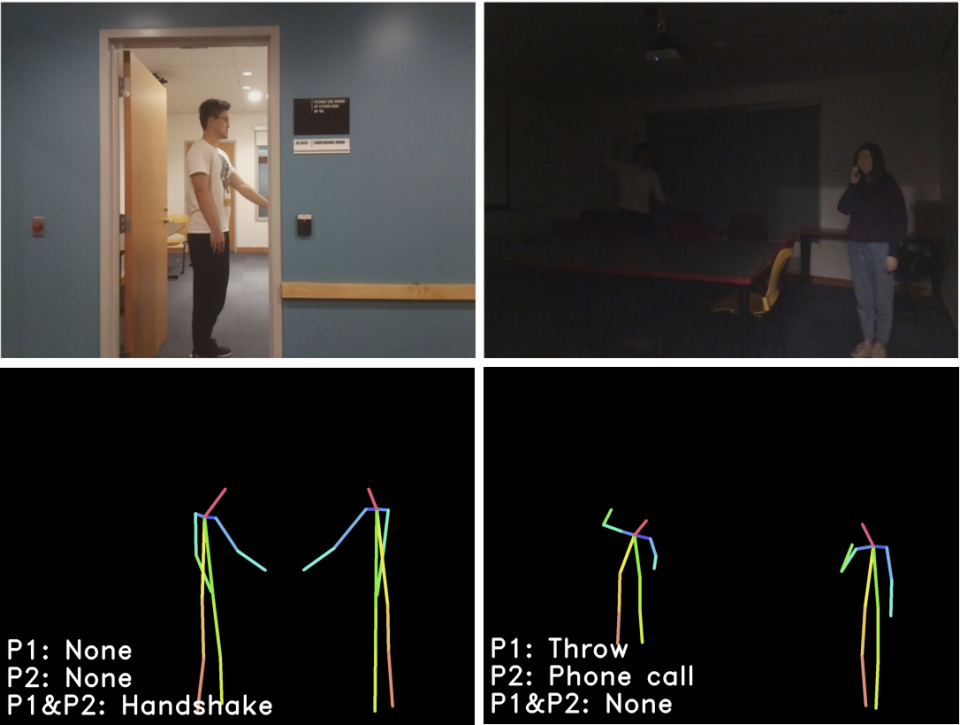

“Our model takes radio frequency (RF) signals as input, generates 3D human skeletons as an intermediate representation, and recognizes actions and interactions of multiple people over time,” the MIT researchers write in the paper.

The scientists used visible-light imagery to train a radio-wave vision system they created. Because they recorded the same scenes in both visible light and radio waves, the researchers could synchronize the two recordings. That let them take what a neural network can recognize about human activity in visible light and teach it to spot the same activity when it is picked up only through radio waves.
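To make the idea of one sensor teaching another a little more concrete, here is a toy sketch in Python. It is not the MIT team's code, and everything in it is a hypothetical stand-in: the `teacher_label` function plays the role of a model that already works on camera frames, the two made-up activities ("walking" and "sitting") and the synthetic two-number "RF frames" replace real radio heatmaps, and a simple logistic regression replaces a deep network. The point is only the training setup: labels come from the camera side of each synchronized pair, while the student learns to predict them from the radio side alone.

```python
# Toy sketch of cross-modal supervision (hypothetical; not the paper's code).
# Each time step has a synchronized (camera_frame, rf_frame) pair. A "teacher"
# that works on camera frames labels each pair; a "student" that sees only the
# RF frame is trained to reproduce that label.
import math
import random


def teacher_label(camera_frame):
    # Hypothetical stand-in for a trained vision model:
    # 1 = "walking", 0 = "sitting".
    return 1 if camera_frame[0] > 0.5 else 0


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_synced_pairs(n, seed=0):
    # Synthetic data: the same hidden activity shows up cleanly in the camera
    # frame and as a noisy signal (plus an irrelevant noise feature) in RF.
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        activity = rng.randint(0, 1)
        camera = [0.8 if activity else 0.2]
        rf = [activity + rng.gauss(0, 0.2), rng.gauss(0, 1.0)]
        pairs.append((camera, rf))
    return pairs


def train_student(pairs, epochs=50, lr=0.5):
    # Logistic-regression student trained by SGD; it reads only RF features.
    w = [0.0] * len(pairs[0][1])
    b = 0.0
    for _ in range(epochs):
        for camera_frame, rf_frame in pairs:
            y = teacher_label(camera_frame)  # supervision from the camera side
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, rf_frame)) + b)
            g = p - y  # gradient of the log-loss with respect to the logit
            w = [wi - lr * g * xi for wi, xi in zip(w, rf_frame)]
            b -= lr * g
    return w, b


def student_predict(model, rf_frame):
    w, b = model
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, rf_frame)) + b) > 0.5 else 0


if __name__ == "__main__":
    train_pairs = make_synced_pairs(200, seed=0)
    test_pairs = make_synced_pairs(500, seed=1)
    model = train_student(train_pairs)
    # At test time the student sees only RF (the camera is "behind the wall");
    # camera frames are used here solely to score its predictions.
    acc = sum(
        student_predict(model, rf) == teacher_label(cam) for cam, rf in test_pairs
    ) / len(test_pairs)
    print(f"RF-only accuracy: {acc:.2f}")
```

Once trained, the student never needs the camera again, which is what allows a radio-based system to keep working when the view is blocked.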

The catch? It takes time for the system to learn to tell a person apart from their surroundings. To address this, the team created an additional set of training videos made of 3D stick-figure models that replicated the people in the footage, and fed those to the neural network as well.

Trained on this imagery, the neural network was able to recognize people's actions both in visible light and through radio waves, including when the people were hidden from view.

There’s no reason to be concerned about privacy. Yet. At the moment, the technology is low resolution, so it can’t identify people by their faces. In fact, it has been proposed as a more privacy-friendly alternative to visible-light cameras, which capture far more identifying detail.

Source: MIT Technology Review
