QAnon spread like wildfire on social media. Here's how 16 tech companies have reacted to the conspiracy theory.

Social-media platforms have been slow to act against the QAnon conspiracy theory, with many only acting to curb the ballooning movement in the fall of 2020.

QAnon followers have been linked to several alleged crimes, including killings and attempted kidnappings.

The conspiracy-theory movement also played a major role in the US Capitol siege on Wednesday.

Tech companies, including Twitter, have adjusted their stances on QAnon in the wake of the deadly riot.

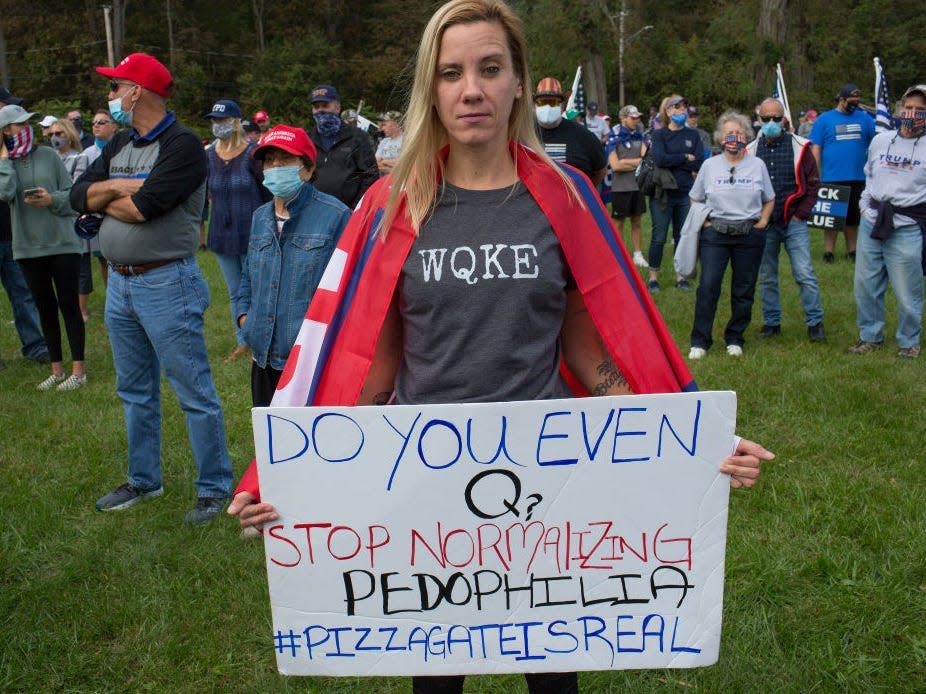

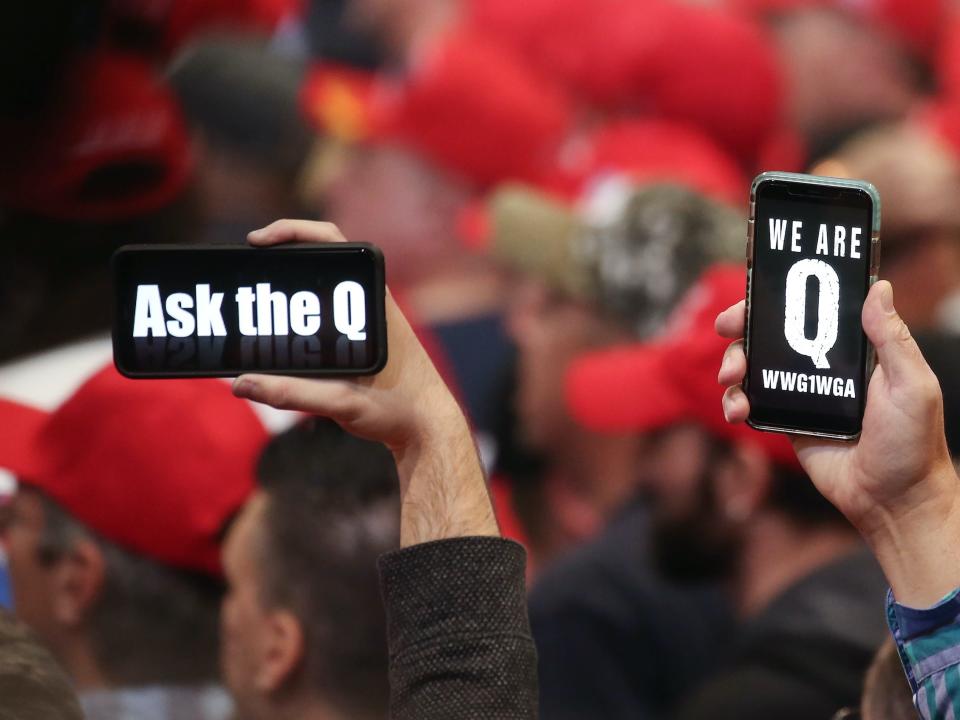

The QAnon conspiracy theory was born on the internet, and while it's spread to real-life rallies in the US and abroad, it's continued to grow online. And yet social-media platforms, where the conspiracy theory has gained a foothold and radicalized people, have been generally slow to ban it.

The conspiracy-theory movement made news again in January, when many of its followers were seen participating in the deadly riot in the US Capitol. The insurrection was fueled by voter-fraud conspiracy theories popularized by President Donald Trump himself, but QAnon influencers, among other far-right figures, helped spread the theories.

QAnon is a baseless far-right conspiracy theory that claims President Donald Trump is fighting a deep-state cabal of elite figures who are involved with human trafficking. It is unfounded, and yet its followers, estimated to be in the millions, have reportedly been linked to several alleged crimes, including killings and attempted kidnappings. In 2019, a bulletin from the FBI field office in Phoenix warned that the conspiracy-theory movement could become a domestic terrorism threat.

Here's how major tech companies have handled the spread of the QAnon conspiracy theory online.

This article was updated to include how companies adjusted their policies in the wake of the Capitol siege, which was linked to QAnon.

Facebook said its companies are cracking down on QAnon.

On October 6, Facebook announced it would remove all pages, groups, and Instagram accounts that promoted QAnon.

The ban, which the company said would be enacted gradually, comes after the platform announced over the summer that it had removed 790 QAnon Facebook groups.

Extremism researchers are tracking how the new ban will play out, as the movement has spread rapidly on Facebook and on Instagram, where many are using "Save the Children" rhetoric to further propagate the movement's misguided focus on human trafficking conspiracy theories.

Facebook has been criticized for its slowness in acting against QAnon.

Twitter announced a moderation plan on QAnon in July. After the Capitol riot, the platform said it removed 70,000 QAnon-associated accounts.

The New York Times reported on Monday that Twitter had removed more than 70,000 accounts that had recently promoted QAnon.

"These accounts were engaged in sharing harmful QAnon-associated content at scale and were primarily dedicated to the propagation of this conspiracy theory across the service," Twitter said in a blog post.

Twitter announced its first sweeping ban on QAnon in July, suspending accounts that were "engaged in violations of our multi-account policy, coordinating abuse around individual victims, or are attempting to evade a previous suspension."

The platform said it would also stop recommending QAnon-related accounts and trends and block URLs associated with QAnon from being shared on Twitter.

In the past, critics have said the platform was slow to act on the movement and hasn't moderated the community enough. On October 3, The Washington Post reported that there were still 93,000 active Twitter accounts referencing QAnon in their profiles, citing research from Advance Democracy, a nonpartisan research group.

YouTube announced a crackdown on QAnon after significant pressure, but stopped short of an explicit ban.

YouTube, where videos that spread the conspiracy theory have thrived and gained millions of views, said in October that it would prohibit QAnon content that threatens violence against a group or individual.

The move is part of an addition to the company's policies against hate and harassment that is focused on "conspiracy theories that have been used to justify real-world violence."

"We will begin enforcing this updated policy today, and will ramp up in the weeks to come," the company said. "Due to the evolving nature and shifting tactics of groups promoting these conspiracy theories, we'll continue to adapt our policies to stay current and remain committed to taking the steps needed to live up to this responsibility."

The announcement, which stopped short of a ban, brought the removal of two top QAnon accounts from the platform, NBC News reported.

YouTube CEO Susan Wojcicki had previously told CNN that the platform had not made a policy banning the conspiracy theory movement. "We're looking very closely at QAnon," Wojcicki said. "We already implemented a large number of different policies that have helped to maintain that in a responsible way."

YouTube has faced criticism for the way its algorithm can send users down rabbit holes of extremism and radicalization, often recommending QAnon-related videos and other videos containing misinformation.

TikTok has disabled popular QAnon hashtags, and the platform said QAnon violates its guidelines.

TikTok announced in July that it disabled the hashtag pages for "QAnon" and "WWG1WGA" in an effort to curb the theory's spread.

Both hashtags had amassed tens of millions of views on the app before they were banned, Insider reported.

A representative for TikTok told Insider in July that QAnon content in general violated the app's guidelines and QAnon-related videos and accounts would be removed.

On October 21, the platform rolled out a stricter plan to combat hate speech and misinformation. "As part of our efforts to prevent hateful ideologies from taking root, we will stem the spread of coded language and symbols that can normalise hateful speech and behaviour," the platform said in a blog post.

Triller said it would ban QAnon after it defended the conspiracy theory's presence on the app.

Triller, the TikTok rival app that previously hosted a slew of QAnon-related content, told Business Insider on Tuesday that the platform banned QAnon. Hashtag pages for "QAnon" and "QAnonBeliever" were unsearchable as of Tuesday, but "WWG1WGA" remained available as a hashtag page.

The company said its recent decision was based on the FBI's 2019 reference to QAnon as a possible domestic terrorism threat.

"We are a platform that believes in freedom of speech, expression, open discussion and freedom of opinion, however when the government classifies something as a terrorist threat we must take action to protect our community," CEO Mike Lu said in a statement.

The announcement was in contrast with what Triller majority owner Ryan Kavanaugh told The New York Times just one week before. Kavanaugh said Triller did not intend to moderate QAnon, saying that "if it's not illegal, if it's not unethical, it doesn't harm a group, and it's not against our terms of service, we're not going to filter or ban it."

Etsy, which previously hosted QAnon merchandise from third-party sellers, banned QAnon products.

An Etsy spokesperson told Insider on October 7 that the company was "in the process of removing QAnon merchandise" from the platform.

"Etsy is firmly committed to the safety of our marketplace and fostering an inclusive environment. Our seller policies prohibit items that promote hate, incite violence, or promote or endorse harmful misinformation. In accordance with these policies, we are removing items related to 'QAnon' from our marketplace," the spokesperson said in a statement.

Etsy had been a hotbed for QAnon merchandise, including stickers, hats, apparel, and other items sold by third-party sellers on the retail marketplace.

After months of refusing to take a stance on QAnon, Amazon announced a ban on products associated with the movement.

The New York Times reported on Monday that Amazon would remove products associated with QAnon from its website. The decision came after Amazon Web Services cut ties with Parler, a platform that's become hugely popular on the far right.

Insider reported in October that Amazon hosted more than 1,000 QAnon-related items for sale from third-party sellers. At the time, the Amazon QAnon product landscape resembled YouTube's infamous related-video recommendation algorithm. A search for "QAnon" on Amazon offered related search terms including "qanon shirt," "qanon flag," "qanon hat" and "qanon stickers and decals."

When asked if the platform would remove these products, which included more than a dozen books filled with misinformation about the theory, an Amazon spokesperson declined to comment.

Fitness company Peloton removed tags related to QAnon.

Peloton, the at-home fitness company, has had to moderate its platform for QAnon tags. On Peloton, users can follow tags to see who else in that interest group is in a ride or workout class with them.

A Peloton spokesperson told Insider that the platform had removed QAnon-related tags in accordance with policies against "hateful content."

"We actively moderate our channels and remove anything that violates our policy or does not reflect our company's values of inclusiveness and unity or maintain a respectful environment," the spokesperson said.

The Verge reported that following the Capitol siege, the company also said it was banning the "StopTheSteal" hashtag, which references the pro-Trump movement alleging voter fraud in the election that led to the riot.

Pinterest says it banned QAnon in 2018 and actively seeks out content related to the conspiracy theory to remove.

A Pinterest spokesperson told Insider that the platform began prohibiting QAnon content in 2018, early in the conspiracy theory's lifespan, but did not announce the move at the time.

"We believe in a more inspired internet, and that means being deliberate about creating a safe and positive space for Pinners. Pinterest is not a place for QAnon conspiracy theories or other harmful and misleading content," the spokesperson said.

In addition to seeking QAnon content to remove, the platform disabled search terms for "QAnon" and "WWG1WGA."

Reddit has emerged as one of the few online communities largely devoid of QAnon.

The Atlantic's Kaitlyn Tiffany reported in September that Reddit had successfully rid its platform of most QAnon content, but company leadership wasn't quite sure how.

Chris Slowe, Reddit's chief technology officer, told The Atlantic, "I don't think we've had any focused effort to keep QAnon off the platform."

While QAnon had a big presence on Reddit in its early days, the platform was quick to ban subreddits associated with the theory as early as 2018. "Reddit has plenty of problems, but QAnon isn't one of them," Tiffany reported.

Twitch has managed to keep QAnon content to a minimum with partial suspensions for some far-right accounts.

As Kotaku reported in September, Twitch, the streaming platform popular in the gaming community, has managed to keep QAnon communications to a minimum.

The platform temporarily banned Patriots' Soapbox, a notorious QAnon-spreading YouTube channel, from Twitch in September. The channel has only a few thousand followers on Twitch, where it was still active as of Thursday. YouTube removed the group's main channel as part of its new crackdown, though a second version of the channel was still up at publishing time.

Twitch's functionality makes it less of a QAnon target, Nathan Grayson of Kotaku wrote, because there's no recommendation algorithm that leads viewers down rabbit holes.

The platform has not rolled out a specific policy on QAnon, but told Kotaku in September that "the safety of our community is our top priority, and we reserve the right to suspend any account for conduct that violates our rules, or that we determine to be inappropriate, harmful, or puts our community at risk."

A Twitch spokesperson told Insider that the platform's guidelines "prohibit hateful conduct, harassment, and threats of violence," and that Twitch evaluates "all accounts under the same criteria and take action when we have evidence that a user has violated our policies."

Discord said QAnon servers violate its regulations and are removed from the platform.

Discord, an instant messaging application, has not made a public statement on its QAnon moderation, but the platform has been suspected of taking down QAnon-related groups. The Discord chat room for listeners of the podcast QAnon Anonymous, which analyzes and debunks the conspiracy theory and its community, has been wrongfully removed from the platform in the past.

There have been several popular Discord chats for QAnon believers, including Patriots' Soapbox, but the platform appeared to have removed Patriots' Soapbox and other groups, according to tweets from members discussing the removal.

A Discord representative told Insider that "QAnon servers violate our community guidelines and we continue to identify and ban these servers and users."

Google banned QAnon merchandise from its shopping tab.

Google, which owns YouTube, blocked search results for people seeking QAnon-related merchandise on its "shopping" tab, The Telegraph reported in August.

"We do not allow ads or products that promote hatred, intolerance, discrimination or violence against others," a Google spokesperson told The Telegraph. Google also removed Parler from its app store, as did Apple, after the riot.

A Google representative did not immediately respond to Insider's request for comment regarding QAnon.

Spotify removed 4 podcasts related to the conspiracy theory.

After an October report from the progressive nonprofit Media Matters for America found QAnon influencers thriving on Spotify, among other platforms, the audio platform told Insider it removed the four podcast shows in question.

"Spotify prohibits content on the platform that promotes, advocates or incites violence against others," a company spokesperson said. "When content that violates this standard is identified it is removed from the platform."

The shows removed included the podcast from Praying Medic, one of the most influential voices in the QAnon movement.

Video-sharing platform Vimeo said it prohibits QAnon content.

A spokesperson for Vimeo, a video-sharing platform, confirmed to Insider that it does not allow QAnon content.

Vimeo removed a video used by QAnon believers to recruit others after Media Matters for America, a progressive nonprofit that tracks media, reported on October 16 that it remained on the platform.

QAnon is banned from Vimeo as part of the company's guidelines that prohibit "conspiracy theory-related content where the underlying conspiracy theory spreads, among other things, hate speech, large scale defamation, false or misleading vaccination or health-safety content."

Patreon, the membership platform, said it will ban QAnon conspiracy theorists.

Patreon, a platform for creators to earn money from fans, said on October 22 that it was banning creators from spreading the QAnon conspiracy theory.

A recent Media Matters for America report, released two days before Patreon's announcement, found 14 QAnon influencers on the platform.

Patreon said that creators who have devoted their accounts to QAnon will be removed, but those "who have propagated some QAnon content, but are not dedicated to spreading QAnon disinformation, will have the opportunity to bring their campaigns into compliance with our updated guidelines."

In the blog post, the company cited other platforms' QAnon moderation plans as part of its decision.

Read the original article on Business Insider