How Real-Life AI Rivals 'Ex Machina': Passing Turing

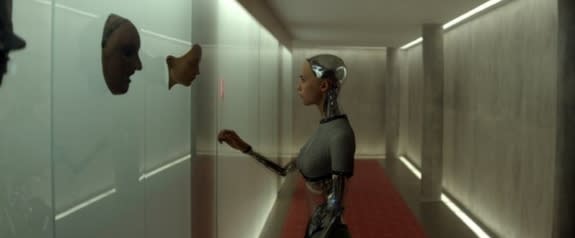

Artificial intelligence will rule Hollywood (intelligently) in 2015, with a slew of both iconic and new robots hitting the screen. From the Turing-bashing "Ex Machina" to old friends R2-D2 and C-3PO, and new enemies like the Avengers' Ultron, sentient robots will demonstrate a number of human and superhuman traits on-screen. But real-life robots may be just as thrilling. In this five-part series, Live Science looks at these made-for-the-movies advances in machine intelligence.

The Turing test, a foundational method of AI evaluation, shapes the plot of April's sci-fi/psychological thriller "Ex Machina." But real-life systems can already, in some sense, pass the test. In fact, some experts say AI advances have made the Turing test obsolete.

Devised in 1950 by computing pioneer Alan Turing, the test holds that if a machine can convince a person, through text-only conversation, that it is human, then that machine has intelligence. In "Ex Machina," Hollywood's latest mad scientist traps a young man with an AI robot, hoping the droid can convince the man she is human, thus passing the Turing test. Ultimately, the robot is intended to pass as a person within human society.

Last year, without so much kidnapping but still with some drama, the chatbot named "Eugene Goostman" became the first computer to pass the Turing test. That "success," however, is misleading, and exposes the Turing test's flaws, Charlie Ortiz, senior principal manager of AI at Nuance Communications, told Live Science. Eugene employed trickery by imitating a surly teenager who spoke English as a second language, Ortiz said. The chatbot could "game the system" because testers would naturally blame communication difficulties on language barriers, and because the teenage persona allowed Eugene to act rebelliously and dodge questions.

As a result, Turing test performances like Eugene's say little about intelligence, Ortiz said.

"They can just change the topic, rather than answering a question directly," Ortiz said. "The Turing test is susceptible to these forms of trickery."

Moreover, the test "doesn't measure all of the capabilities of what it means to be intelligent," such as visual perception and physical interaction, Ortiz said.

As a result, Ortiz's group at Nuance and others have proposed new AI tests. For example, Turing 2.0 tests could ask machines to cooperate with humans in building a structure, or associate stories or descriptions with videos.

Aside from the separate challenge of building a realistic-looking humanoid robot, AI still faces a number of hurdles before it could convincingly "pass" as a human in today's society, Ortiz said. Most tellingly, computers still can't handle common-sense intelligence very well.

For instance, when presented with a statement like, "The trophy would not fit in the suitcase because it was too big," robots struggle to decide if "it" refers to the trophy or the suitcase, Ortiz said. (Hint: It's the trophy.)

"Common sense has long been the Achilles' heel of AI," Ortiz said.

Check out the rest of this series: How Real-Life AI Rivals 'Chappie': Robots Get Emotional, How Real-Life AI Rivals 'Ultron': Computers Learn to Learn, How Real-Life AI Rivals 'Terminator': Robots Take the Shot, and How Real-Life AI Rivals 'Star Wars': A Universal Translator?

Follow Michael Dhar @michaeldhar. Original article on Live Science.

Copyright 2015 LiveScience, a Purch company. All rights reserved. This material may not be published, broadcast, rewritten or redistributed.