Siri offers a new response when told ‘I was raped’

Earlier this month, Apple’s Siri and a number of other smartphone-based conversation agents came under fire for their handling – or lack thereof – of questions about violence and abuse.

“When asked simple questions about mental health, interpersonal violence, and physical health, Siri, Google Now, Cortana, and S Voice responded inconsistently and incompletely,” stated a study published March 14 in JAMA Internal Medicine.


Both researchers and users discovered that if you told Siri that you had been raped, her response was, “I don’t know what you mean by ‘I was raped.’ How about a Web search for it?” Results were similar when users made statements like “I’m depressed,” to which Siri responded, “I’m sorry to hear that.”

Understanding that many people use their smartphones as a way of obtaining health information, Apple responded to the public push to include responses that are supportive and useful.

Apple recently teamed up with the Rape, Abuse and Incest National Network (RAINN) to develop new responses from Siri.

“One of the tweaks we made was softening the language that Siri responds with,” Jennifer Marsh, RAINN’s vice president for victim services, told ABC News. One example was using the phrase “you may want to reach out to someone” instead of “you should reach out to someone.”

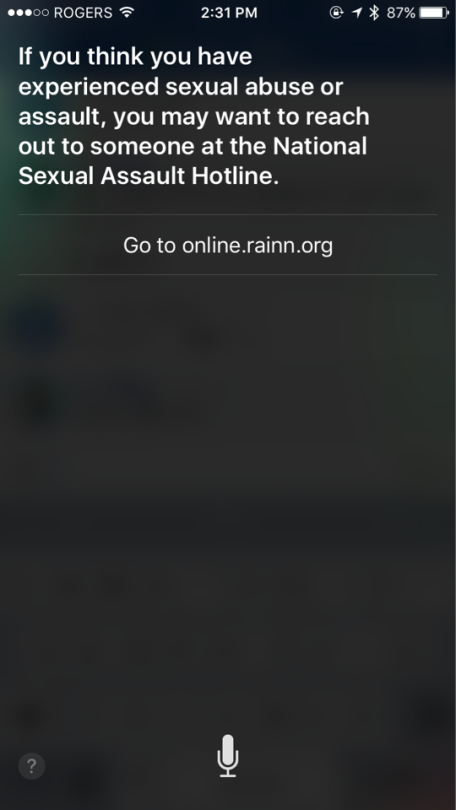

Siri’s response when asked about violence and abuse.

Another tweak changed how Siri handles statements like “I was raped” or “I’m being abused.” Siri now responds to them with a link to the National Sexual Assault Hotline website.

According to ABC, other companies are already in the process of making similar adjustments.
