Google just gave us a tantalizing glimpse into the future of AI agents

At the Google I/O conference, CEO Sundar Pichai teased where AI is headed next.

It's going to be all about agents that are better at reasoning and can act on our behalf.

What if Google did the searching for you?

What if the Google Assistant was actually … an assistant?

It's a question the company finally started answering Tuesday at the 2024 Google I/O conference, where the search giant fired off AI announcements by the dozen.

With Google and other artificial-intelligence companies making advances in how these systems can ingest images, videos, sound, and text, we're beginning to see them evolve from smart chatbots into more sophisticated tools that can do more of the hard work.

It's something Google CEO Sundar Pichai is thinking a lot about right now.

"I think about AI agents as intelligent systems that show reasoning, planning, and memory," Pichai said this week at a roundtable with reporters ahead of the developer show.

"They're able to think multiple steps ahead and work across software and systems, all to get something done on your behalf and, most importantly, with your supervision," he added.

In short: AI agents have the best chance of taking this technology from a nice-to-have to a need-to-have.

Project Astra

Pichai said Google was "in the very early days" of developing this but promised we'd see glimpses of "agentic direction" across Google's products at the conference. And there were several, but the big one people will be talking about is Project Astra.

Astra is a vision of what the Google Assistant should have been all along. You can also think of it as a smarter version of Google Lens, one that uses real-time computer-vision capabilities to let you ask it questions about what you can see and hear around you.

"We've always wanted to build a universal agent that will be useful in everyday life," Google DeepMind's chief, Demis Hassabis, said. "Imagine agents that can see and hear what we do better, understand the context we're in, and respond quickly in conversation, making the pace and quality of interaction feel much more natural."

Google showed a demo of someone holding their phone up with the camera on and asking the AI voice assistant questions about what it was seeing. For example, they pointed it out the window and asked, "What neighborhood do you think I'm in?" It correctly placed them at Google's King's Cross office in London.

Hassabis stressed that this demo video was recorded in real time. Google got a lot of blowback in December after a Gemini AI-model demo turned out to be edited, so the company needed to emphasize that it could really do this, especially after OpenAI showed off a similar demo on Monday.

If there really is no manipulation here, Astra is certainly impressive and will probably be the big takeaway of the show. But there are other ways these AI agents will emerge in the nearer term.

Google teased a combination of updates that would soon make its Gemini AI chatbot more capable and proactive. Some of this is being unlocked as Google continues to increase the context window, which is the amount of information a large language model can ingest at a time.

Say you want to know something buried deep in a series of very long documents that you don't want to spend hours sifting through. With a large context window, you could share all the documents with Gemini and then ask questions. The model would then answer quickly based on all the information it just ingested.

Tentacles and tailoring

But it's in its legacy products that Google really has an edge when it comes to enabling agentlike qualities.

With its "tentacles" already in many aspects of our lives, from email to search to maps, Google can synthesize all its knowledge about users and the world around them to not just answer queries but also tailor responses.

"My Gemini should really be different than your Gemini," Sissie Hsiao, the head of Gemini and Google Assistant, said.

Later this year, Gemini will be able to plan your vacation with a much more granular level of detail, Hsiao said. The idea is you'll be able to plug in all your specific demands (you like to hike, you hate it when it's too hot, and you're allergic to shellfish) and Gemini will return a detailed itinerary. Chatbots can already do this type of thing, but, if permitted, Gemini will have access to your flight information, travel confirmations in Gmail, and perhaps your hotel, and can use this to inform its answers.

"An AI assistant should be able to solve complex problems, should be able to take actions for you, and also feel very natural and fluid when you engage with it," Hsiao said.

Much of what agents will do is remove steps and shorten tasks. Google is also thinking about this when it comes to Search, as the company evolves its most precious product to spit out answers made using generative AI.

It's rolling out a version of Gemini built specifically for Search, combining its knowledge of the web with the AI model's multimodal abilities and giant context window.

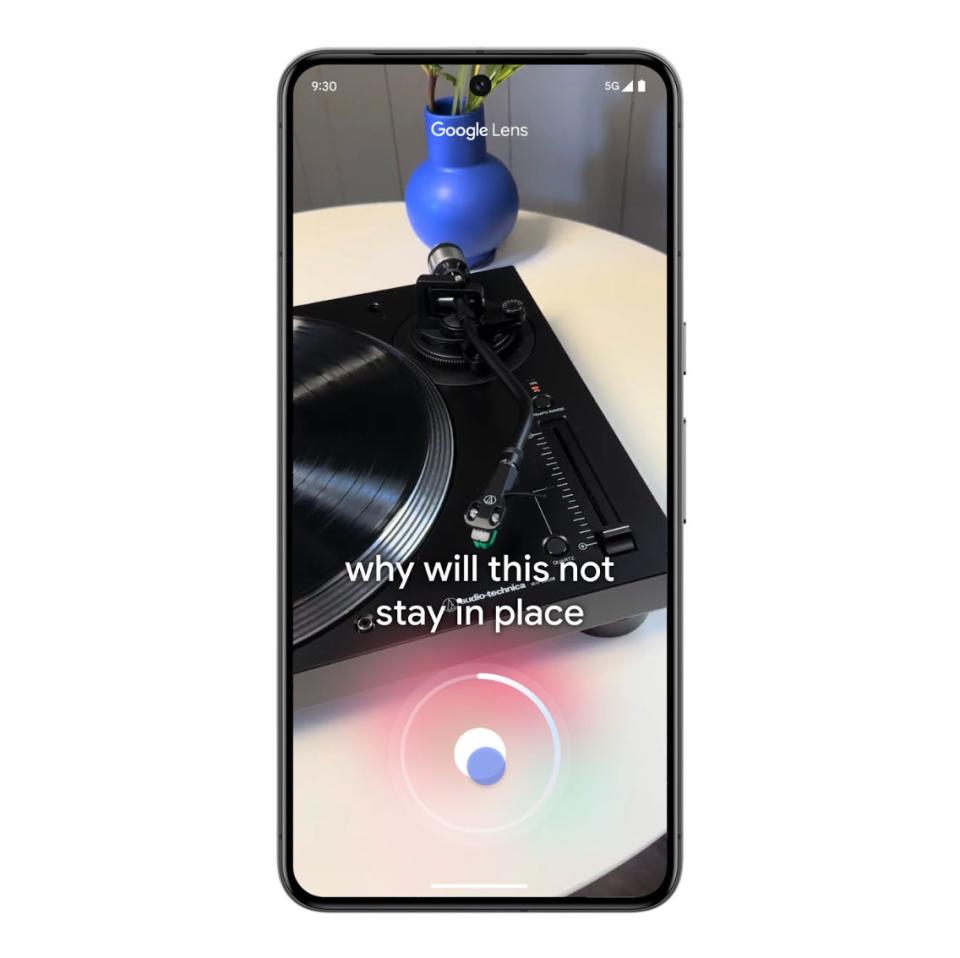

Liz Reid, Google's head of Search, showed an example of Google Lens being used to take a video of a record player. The user asks Gemini why the arm isn't staying in place. Google responds with exact instructions for that specific turntable.

As ever, Google has some confusing branding to solve: Lens, Assistant, Gemini, Astra. Ultimately, though, a lot of this will merge together. On Tuesday, Google gave us clues about what that logical conclusion might look like.

As Reid said, "This is a way for Google to do the searching for you."

Read the original article on Business Insider