This Tiny Town Created by ChatGPT Is Better Than Reality TV

Anyone who has ever played The Sims can tell you: That game can get chaotic as hell. When left to their own devices, your Sims are liable to do anything from peeing themselves, to starving to death, to accidentally setting themselves (and their own children) on fire.

This level of “free will,” as the video game calls it, offers an amusing if somewhat unrealistic simulation of what people might do if you left them alone and observed them from a distance like an omniscient and uncaring god. As it turns out, it’s also the inspiration behind a new study simulating human behavior using ChatGPT.

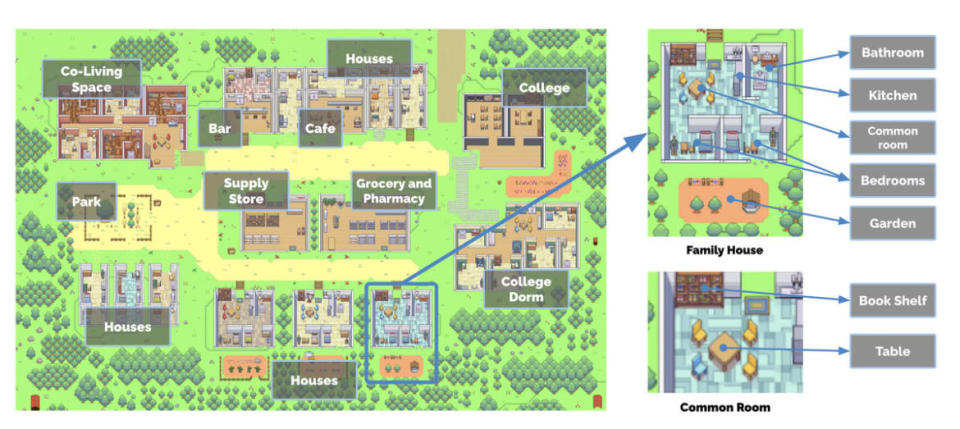

A team of AI researchers at Google and Stanford University posted a study online on April 7 in which they used OpenAI’s chatbot to create 25 “generative agents,” or unique personas with identities and goals, and placed them into a Sims-like sandbox town called Smallville. The authors of the study (which hasn’t been peer-reviewed yet) observed the agents as they went about their days, going to work, talking with one another, and even planning activities.

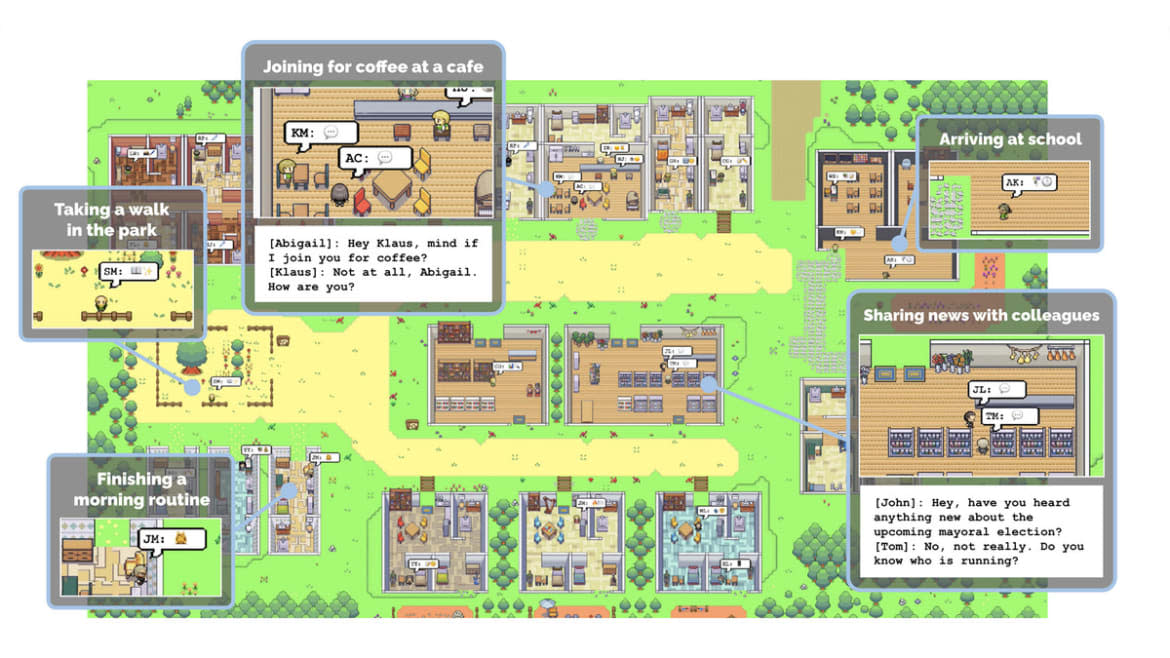

The bots and their virtual environment were rendered in delightful 16-bit sprites, giving the simulation the look and feel of a video game. The result was a pretty idyllic village that seemed ripped out of Harvest Moon or Animal Crossing, if, you know, those games were also rife with incredibly complex and uncomfortable ethical and existential questions.

The world of Smallville is based entirely on a large language model.

“Generative agents wake up, cook breakfast, and head to work; artists paint, while authors write; they form opinions, notice each other, and initiate conversations; they remember and reflect on days past as they plan the next day,” the authors wrote.

To create each agent, the team gave ChatGPT a one-paragraph prompt that included the character’s memories, goals, relationships, and job. As the characters traverse their virtual world, they’re able to perceive the environment around them and make decisions based on their memories, the setting, and how other characters interact with them.
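To make the idea of a one-paragraph seed prompt concrete, here is a minimal Python sketch. It is not the study's code: the `AgentProfile` class, its field names, and the sentence templates are all hypothetical, and the example goal is invented because the article does not list John Lin's goals. It only shows how memories, goals, relationships, and a job might be flattened into a single paragraph of text for a language model.

```python
from dataclasses import dataclass, field


@dataclass
class AgentProfile:
    """Seed description for one agent (hypothetical structure, not from the study)."""
    name: str
    job: str
    goals: list[str] = field(default_factory=list)
    relationships: dict[str, str] = field(default_factory=dict)
    memories: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Flatten the profile into a single paragraph of plain text."""
        sentences = [f"{self.name} is a {self.job}."]
        for person, relation in self.relationships.items():
            sentences.append(f"{person} is {self.name}'s {relation}.")
        for goal in self.goals:
            sentences.append(f"{self.name} wants to {goal}.")
        for memory in self.memories:
            sentences.append(f"{self.name} remembers that {memory}.")
        return " ".join(sentences)


# Job and family details come from the article's John Lin example.
john = AgentProfile(
    name="John Lin",
    job="pharmacy shopkeeper",
    relationships={
        "Mei": "wife, a college professor",
        "Eddy": "son, a student of music theory",
    },
    goals=["keep up with local news"],  # invented for illustration only
)
seed_prompt = john.to_prompt()
print(seed_prompt)
```

Keeping the profile as structured data and rendering it to text at the last moment is one possible design choice; it would make it easy to generate 25 different characters from the same template.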

For example, there’s the character John Lin. He’s a pharmacy shopkeeper who lives with his college professor wife Mei and son Eddy who is studying music theory at school. The authors observed John go about his day based on this initial prompt, waking up promptly at 7am before brushing his teeth, taking a shower, and eating breakfast before watching the news. Later, he greets his son Eddy and wife Mei before going to his job at the pharmacy. Just another day in paradise for the Lin family.

Where things get really interesting, though, is when researchers began witnessing emergent behavior, or a nonobvious or unexpected side effect of the AI system. This included instances of the characters sharing new information with one another that they later passed on to other agents.

The citizens of Smallville interact with each other and their environment at a local eatery.

For example, a character named Sam Moore told another named Tom Moreno he wanted to run for mayor of Smallville. Later, Tom spoke with John who had also heard the news about Sam’s political aspirations.

From the study:

John: I heard that Sam Moore is running for mayor in the local election. Do you think he has a good chance of winning?

Tom: I do think he has a good chance. He’s been working hard in the community and I think he will get a lot of support. What do you think?

John: I think it’s great that he’s running. I’m curious to see who else is running and how the election will turn out.

Interestingly, the interactions seemed to go beyond simple conversations and into the juicy realm of good old-fashioned gossip. At one point, Tom confided in a character named Isabella Rodriguez that not only does he think that Sam is “out of touch with the community” and doesn’t have their “best interests at heart,” but he straight up doesn’t like Sam. It’s the kind of sordid drama that could make something like this ripe for a Real Housewives-style reality TV show on Twitch.

Another emergent behavior involved relationship memory, where agents were observed forming new relationships with one another and remembering them. For example, Sam ran into Latoya Williams at the park and introduced himself. Latoya mentioned that she was working on a photography project, and when the two met again, Sam asked her how the project was going.

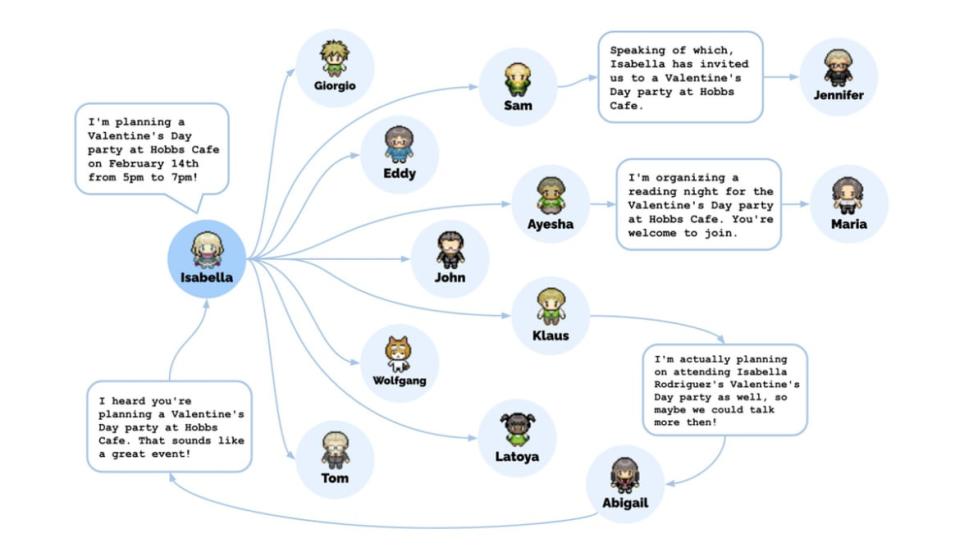

The people of Smallville also seemed capable of coordinating and organizing with one another. Isabella’s initial prompt included the fact that she wanted to throw a Valentine’s Day party. When the simulation began, her character set out to invite Smallvillians to the party. Word spread about the event, people began asking each other out on dates to the party, and they all showed up without it being prompted at the outset.

At the heart of the study is the memory retrieval architecture connected to ChatGPT that allows the agents to interact with each other and the virtual world around them.

“Agents perceive their environment, and all perceptions are saved in a comprehensive record of the agent’s experiences called the memory stream,” the authors wrote. “Based on their perceptions, the architecture retrieves relevant memories, then uses those retrieved actions to determine an action.”
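The perceive, store, retrieve, and act loop the authors describe can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the study's implementation: the `MemoryStream` class, the `build_prompt` helper, and the word-overlap relevance score are all hypothetical stand-ins, and the article does not say how the real system ranks memories. The final step, sending the prompt to a language model, is left out.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStream:
    """Append-only record of an agent's perceptions (hypothetical class)."""
    entries: list[str] = field(default_factory=list)

    def perceive(self, observation: str) -> None:
        """Save every perception, in the order it happened."""
        self.entries.append(observation)

    def retrieve(self, situation: str, k: int = 2) -> list[str]:
        """Return up to k memories that share words with the situation.

        Assumption: relevance is the count of shared lowercase words,
        with ties broken in favor of the more recent memory.
        """
        query = set(situation.lower().split())
        scored = []
        for index, memory in enumerate(self.entries):
            overlap = len(query & set(memory.lower().split()))
            if overlap > 0:
                scored.append((overlap, index, memory))
        scored.sort(key=lambda item: (-item[0], -item[1]))
        return [memory for _, _, memory in scored[:k]]


def build_prompt(situation: str, memories: list[str]) -> str:
    """Combine the current situation with retrieved memories (hypothetical helper)."""
    recalled = "; ".join(memories) if memories else "nothing relevant"
    return f"Situation: {situation}\nRelevant memories: {recalled}\nWhat do you do next?"


stream = MemoryStream()
stream.perceive("Sam Moore is running for mayor")
stream.perceive("Isabella is planning a Valentine's Day party")
stream.perceive("The pharmacy opens at 8am")

situation = "Tom asks about Sam Moore and the mayor election"
recalled = stream.retrieve(situation, k=1)
prompt = build_prompt(situation, recalled)
print(prompt)
```

In this sketch, only the memory about Sam's campaign is recalled when Tom brings up the election, which is roughly how a piece of news could resurface in a later conversation.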

On the whole, nothing disastrous or evil occurred akin to your house burning down because your character was too busy playing video games to notice the fire in the kitchen like in The Sims. However, like any good dystopia, Smallville is a seemingly idyllic community that hides some big, hairy, and consequential ethical issues underneath its 16-bit veneer.

The study’s authors note several societal and ethical considerations with their study. For one, there’s a risk of people developing unhealthy parasocial relationships with artificially generated agents like the ones in Smallville. While it might seem silly (who would form a relationship with a video game character?), we’ve already seen real-world examples of parasocial relationships being formed with chatbots to deadly results.

In March, a man in Belgium died by suicide after developing a “relationship” with an AI chatbot app called Chai and obsessively chatting with it about his climate doomerism and eco-anxiety. It culminated with the chatbot suggesting that he kill himself, claiming that it could save the world if he were dead.

In much less extreme though no less unsettling examples, we’ve seen users speak to early versions of Microsoft’s Bing chatbot for hours at a time—so much so that users reported that the bot had done everything from fall in love with them to threaten to kill them.

It’s easy to look at these cases and assume there’s no way you’d ever fall for something like that. However, some new research suggests that we grossly underestimate the ability of chatbots to influence even our moral decision making. You might not realize that a chatbot is convincing you to do something you wouldn’t normally do until it’s too late.

The authors offer up the typical ways to mitigate these risks including clearly stating that the bots are indeed just bots, and that developers of such generative agent systems should include guardrails to make sure that the bots don’t talk about inappropriate or ethically fraught issues. Of course, that offers up a whole set of questions like who gets to decide what’s appropriate or ethically sound in the first place?

Irina Raicu, director of the internet ethics program at Santa Clara University, noted to The Daily Beast that one big issue that might come from a system like this is its use in prototyping—which the study’s authors note is a potential application.

For example, if you’re designing a new dating app, you might use generative agents to test it out instead of recruiting human users, in order to save money and resources. Sure, the stakes are small if you’re just making something like a dating app, but the consequences could be much bigger if you’re using AI agents for something like medical studies.

Of course, that comes at a massive cost and presents a glaring ethical issue. When you take human users out of the loop entirely, then what you’re left with are products and systems designed for a simulated user—not a flesh-and-blood one who will be, you know, actually using it. This issue recently made waves amongst UX designers and researchers on Twitter after designer Sasha Costanza-Chock shared a screenshot of an AI user product.

Now class, can anyone tell me why this might be a bad idea? pic.twitter.com/VnieM7xLEQ

— Sasha Costanza-Chock is @schock@mastodon.lol (@schock) April 4, 2023

“The question is prototyping what—and whether, for the sake of efficiency or cost-cutting, we might end up deploying poor simulacra of people instead of, say, pursuing necessary interactions with potential living, breathing stakeholders who will be impacted by the products,” Raicu said in an email.

To their credit, the authors of the Smallville study suggest that generative agents “should never be a substitute for real human input in studies and design processes. Instead they should be used to prototype ideas in the early stages of design when gathering participants may be challenging or when testing theories that are difficult or risky to test with real human participants.”

Of course, that puts a lot of responsibility on companies to do the right thing—which, if history is any indication, just won’t happen.

It should also be noted that ChatGPT isn’t exactly designed for this kind of human-to-human simulation either—and using it for generative agents for something like a video game might be impractical to the point of uselessness.

However, the study does show the power of generative AI models like ChatGPT and the massive consequences they could have for simulating human behavior. If AI gets to the point where big companies and even academia feel comfortable using it for things like prototyping, or as a replacement for humans in studies, we might all suddenly find ourselves using products and services designed not for us but for the robots that were made to simulate us.

Knowing all that, maybe it is just better if we stick to The Sims—where you know that if your house is burning down or you just lost your job, at least you can quit the game and start over.
