Why University of Tennessee students can use AI for some homework next semester

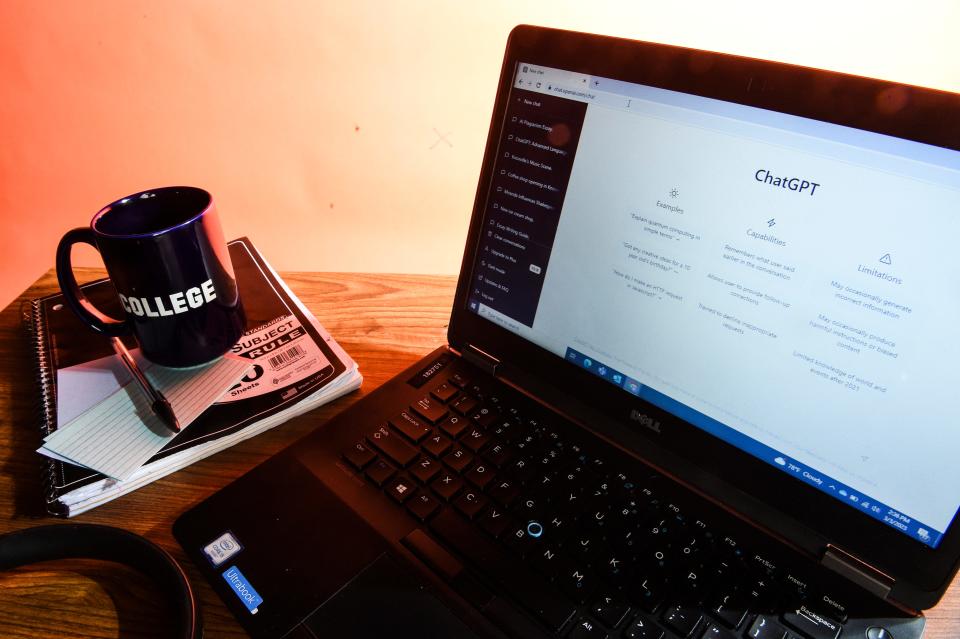

Artificial intelligence might seem scary. But the University of Tennessee at Knoxville will use it to improve the educational experience this fall.

The university has a plan for how teachers can use artificial intelligence in classes, and how students can learn more about the breakthrough technology through new classes.

Teachers can choose to use AI in their classrooms in three ways when UT welcomes its biggest student population yet, according to new guidelines for the 2023-24 academic year.

Open use: Students can use AI for any assignment as long as AI-generated materials are credited.

Moderate use: Students can use AI for specific assignments as long as the AI-generated materials are credited.

Strict use: Students are not allowed to use AI and using it would be considered academic dishonesty.

Associate Vice Chancellor Lynne Parker has been creating new courses about AI and hiring faculty in the field. UT experienced its first case of plagiarism with AI in the spring.

Parker serves as the director of the AI Tennessee Initiative. She worked in the White House Office of Science and Technology Policy from 2018 to 2022, and has worked in AI for nearly her entire career.

This interview has been lightly edited for length and clarity.

What are your thoughts on this current state of AI, with ChatGPT at the forefront?

Lynne Parker: I think people that haven't really had the opportunity to learn about it or haven't really thought about it see something like ChatGPT and think all AI is like that, and it's not.

It's important for people to be aware that there are a lot of different kinds of AI and it's used even in things like word processors to help improve your grammar, or the driver assist modules that are on your vehicle are effectively based on some early robotics work. There are a lot of systems that are out there that are leveraging AI.

In the last couple of years, there's been much more discussion around the potential harms that could be caused by various types of AI, and not considering the large language models or the generative AI, but just considering even basic formulas.

If people don't have agreed-upon processes for letting people know how decisions are being made about them, then people feel that effectively that the AI or the computers are taking over and humans are no longer accountable.

What are UT and the AI Initiative planning for next semester?

In the research area, we have some new faculty hires. Some of them are more fundamental AI faculty. Others are more at the intersection of AI with other disciplines: culture, health, transportation and so forth.

We have $1 million of seed funds that I'm awarding across the university to build up some new ideas in AI, particularly at the intersection with other disciplines.

We're beginning to teach some new AI classes next year.

We have a new AI 101 class that will be taught starting in the fall. That's intended for every student, particularly undergraduates on campus, and for anyone who wants to learn about AI. It's not a programming class, but it's about what we call AI literacy.

Then we'll have courses at the 400 level, which is for seniors, and the 500 level, which is for graduate students, tailoring these to be strongly interdisciplinary so that people learn about the topic from multiple perspectives and not just the computer science or mathematics perspective.

We've partnered with The AI Education Project, which is a nonprofit that is helping us reach out to the teachers of junior high and high school students to help us provide some training on AI as well as some curricula that these teachers can use. Effectively, we're teaching the teachers.

There's also some more concrete things that we're doing as it relates to policies. We've had a number of committees that have been looking at ChatGPT and what it means for how it should be used in the classroom or in research, maybe what kind of policies we should have on campus as it relates to the use of ChatGPT.

How do you think AI changes the student experience?

I think what we're going to be finding is that in light of ChatGPT and AI tools that can be used in education, there are going to be two different angles to it.

One is AI that is being used in education. From a student experience perspective, it would be how the instructor (creates) assignments in ways that are maybe a little bit different.

Down the road ... AI that is being used in education to help tailor the personalization of the education. Now, you can begin to have an AI system that understands through your interaction with it what you know, but importantly what you don't know. And then the AI system perhaps can tailor its own questions, assignments or feedback that is specific to the areas based on your interaction with it.

That's AI that's being used to improve education. It is directly helping you in your learning process, versus AI as a tool where you're directing it. It's not learning about your knowledge and awareness, but you're using it to help you with some of your creative processes.

How do you think AI will change within the next few years, especially in academia?

It's becoming increasingly difficult to predict the future on these kinds of technologies.

That personalized approach to education will become more mature. It's not new. It's something that people have worked on for 10 or 20 years, if not more. But it's something that I think may become more mature so that we can actually leverage it in various ways.

You think about the introduction of the calculator and what that meant in classrooms and how you had fewer activities that involve you doing longhand math for instance. I wouldn't be surprised if we have some similar kind of change, that maybe the way that we introduce writing assignments might be different in light of everyone having access to ChatGPT.

What advice do you have for people who are either using AI or are just experimenting with it for the first time?

I would say exploring is a great way to learn. I think using the tools - so many of the tools are available for free - to explore and learn about them is a great way to learn what's possible, maybe what some of the limitations are.

I think I would caution people against just reading headlines and reading some of … the more sensational side. Some people are saying pretty bold and broad statements that may or may not be true. I think people need to become their own judge, and by using some of these tools you can begin to learn what they can and can't do and it helps increase your own education as well.

What do you hope to accomplish with the AI Initiative as it moves forward?

I would like to see increased investments across the state in the education activities and the research activities and then partnership activities, … so that we can work together more effectively to achieve this end (goal), which is where Tennessee is a leader in the data-driven knowledge economy.

I think that's going to require us all working together. It will require some investments, and the education and the reskilling of adult learners and providing opportunities for adults to learn basic fields.

We'd love to have new businesses that come to Tennessee that stress more of these skills. We would love to have within the existing businesses, to have people that are leveraging AI within those businesses, so that we're training all of our workforce to be able to engage in these kinds of opportunities. And of course, we want to leverage our research activities so that we're solving tough challenges that the state has.

How have you seen AI change over the course of your career?

Back in the '80s, it was still very science fiction-like. It was fascinating. It was difficult to get AI systems to do things that were anywhere near smart or human-like.

And then maybe 10 or so years ago, computers got faster and we have digitized the world. Researchers had tailored algorithms … so that they could be particularly useful for what we call data-driven AI.

What people discovered around 10 years ago is that you can apply this approach to finance, to various types of education, to various areas in engineering and business of all types. People were realizing that this type of approach - data-driven AI - is a good model for coming up with good applications.

I think most people did not realize that they were already using AI all the time, like Google Maps, Siri or recommender systems.

AI has become pervasive, but I don't think people really realized it was AI that was behind the scenes. When ChatGPT came along a few months ago, people suddenly realized, 'Wow, the capabilities of this AI system are much more than I imagined,' and it's true. I think the language capabilities of those models really opened up a lot of people's eyes.

What are your final thoughts on AI?

My take-home message is that AI is many things.

AI can be used for so many beneficial purposes. AI is being used now to help come up with new treatments and approaches to deal with cancer, for instance. New therapeutics for a variety of different diseases. It's being used to create climate-resistant crops in agriculture. It's being used to help improve how our cities operate.

You can pick any domain of interest and find ways where AI is helping improve productivity, improve efficiency, improve outcomes, improve quality of life, improve the economy, improve national security. There's so many beneficial uses of AI that we need to always keep those in mind and recognize that nearly all of us have been on the receiving end of those benefits.

At the same time, we want to always be responsible in how we approach AI and make sure that AI systems are not being used in some way that harms people, such as AI being used to make decisions about people's access to resources in ways that are not transparent and people don't understand what's going on. I think we have to constantly be cognizant of both the benefits and our responsibility to use it in a trustworthy manner.

Keenan Thomas is a higher education reporter. Email keenan.thomas@knoxnews.com. Twitter @specialk2real.


This article originally appeared on Knoxville News Sentinel: University Tennessee Knoxville students will learn about AI, ChatGPT